There are three critical pieces to cybersecurity functionality: Prevention, Detection and Response. Prevention puts up roadblocks for adversaries aiming to conduct malicious activities, while response triages incidents after they occur. The middle piece is most crucial – detection identifies active threats, thereby reducing financial impact, helping to inform prevention measures and minimizing the time hackers have to cause harm within an environment.

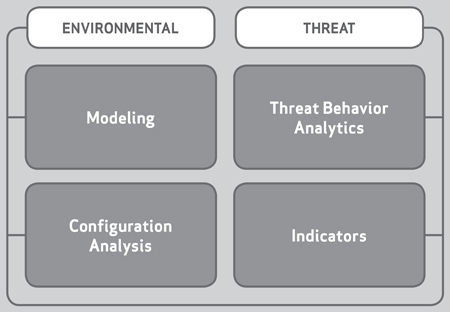

Modern threat detection falls into one of four categories: Configuration, Modeling, Indicator and Threat Behavior. Each is different, and it’s up to the organization to determine what they need and whether a new method can complement existing security tools.

Threat detection rose to prominence as the internet transitioned from a small group of friendly parties to an open collective now including people who would do harm to others. It began in earnest in the mid-1980s following the publication of Dorothy Denning’s intrusion detection model. The first commercial intrusion detection system (IDS) launched in the early 1990s, and anti-virus products – early elements of threat detection – became fully established in that decade as well. Commercial products began to standardize host- and network-based anomaly detection, but as the space evolved and threats changed, the industry began shifting from exclusively using indicators of compromise (IOCs) and signatures, to methods like machine learning and behavior analysis.

For the last few decades, collecting and storing data has been expensive, which has limited our capabilities. As the price of computing has dropped, approaches that were once impractical because of limited storage (behavior analysis) or limited computing power (machine learning) have become possible. Both can now be worked into defensive strategies and existing security models; however, buzzwords can promise results that the tools cannot deliver.

Though there is no one-size-fits-all detection strategy, grouping threat detection into four types gives defenders a collection of approaches to choose from and helps them identify which one, or which combination, works best in their environment.

The first step to any security strategy is threat modeling. Creating an inventory of the system, the most vulnerable assets and relevant threats will help drive organizations’ threat detection decision-making and prevent overburdening security operations. A detection strategy can only be as successful as the threat model behind it.

The first threat detection category, Configuration-based detection, identifies deviations from a known architecture, such as two devices that communicate with each other over the same ports at regular intervals. Let’s say one device, a Programmable Logic Controller (PLC) in a field environment, regularly and exclusively communicates with an Engineering Workstation (EWS) at the company’s headquarters over TCP 102. Configuration-based detection could flag a potentially malicious deviation if the EWS began communicating with a device outside that pairing, or over other ports and protocols.

This detection type could theoretically identify all malicious activity, and it’s easy to maintain if the environment does not change. However, it does not work well for identifying bad actors on systems requiring frequent modifications. This method also requires defenders to have an in-depth and hands-on knowledge of the system architecture and configurations.
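The PLC and EWS example can be sketched as an allowlist check. This is a minimal illustration, not a production detector: the host names, the `Flow` tuple and the `find_deviations` helper are hypothetical, and the external address comes from a documentation-only range.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    """One observed network conversation (hypothetical record shape)."""
    src: str
    dst: str
    protocol: str
    port: int


# Hypothetical known-good configuration: the PLC and the EWS
# talk only to each other, and only over TCP 102.
ALLOWED_FLOWS = {
    Flow("plc-field-01", "ews-hq-01", "tcp", 102),
    Flow("ews-hq-01", "plc-field-01", "tcp", 102),
}


def find_deviations(observed, allowed=ALLOWED_FLOWS):
    """Return observed flows that are not part of the known configuration."""
    return [flow for flow in observed if flow not in allowed]


observed = [
    Flow("plc-field-01", "ews-hq-01", "tcp", 102),   # expected traffic
    Flow("ews-hq-01", "203.0.113.7", "tcp", 443),    # not in the baseline
]

deviations = find_deviations(observed)
for flow in deviations:
    print(f"ALERT: unexpected flow {flow.src} -> {flow.dst} "
          f"{flow.protocol}/{flow.port}")
```

The maintenance cost mentioned above shows up in `ALLOWED_FLOWS`: every legitimate change to the environment means someone with knowledge of the architecture has to update that set by hand.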

Modeling-based detection is similar to configuration-based methods; however, where configuration relies on expert human knowledge of environments, modeling is based on mathematical approaches that assume detection tools can distinguish bad activity from good. Modeling starts with an automated approach, often using machine learning, to establish a baseline of the system activity and configurations. The detection engine then automatically alerts on activity that differs from what it identifies as normal. This method also has drawbacks: although system operators will get alerts on the malicious activity, modeling doesn’t provide any additional context about the activity itself to help triage or further investigate an incident. It’s also possible that when a company implements this method with a hacker already inside their environment, malicious activity will be included in the “safe” baseline.
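As a rough illustration of the baseline-then-alert idea, the sketch below swaps machine learning for a much simpler statistical stand-in: a mean and standard deviation learned from history, with a z-score threshold. The metric (connections per hour), the sample values, the threshold of 3 and the function names are all assumptions made for this example.

```python
from statistics import mean, stdev


def build_baseline(samples):
    """Learn a simple baseline (mean, standard deviation) from history."""
    return mean(samples), stdev(samples)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        # A perfectly flat history: anything different is a deviation.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical training window: connections per hour from one workstation.
history = [12, 11, 13, 12, 14, 12, 11, 13]
baseline = build_baseline(history)

print(is_anomalous(13, baseline))   # within the normal range
print(is_anomalous(60, baseline))   # far outside the learned baseline

# If an intruder was already active while the baseline was learned,
# their traffic becomes part of "normal" and is no longer flagged.
poisoned_baseline = build_baseline(history + [60, 58, 61, 59])
print(is_anomalous(60, poisoned_baseline))
```

Both drawbacks described above are visible here: the alert is only a boolean with no context about what the activity was, and the poisoned baseline treats the intruder's volume as normal.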

The 4 Types of Threat Detection

Indicator-based detection can be created and deployed quickly. Indicators of compromise (IOCs) are artifacts analysts use to identify malicious activity, including hash values, IP addresses, domain names or malware signatures. This type of detection can provide some context to the threat and is most useful when paired with other methods: modeling can automatically detect a deviation from the baseline, and analysts can use indicators to augment the data. However, an indicator must first be observed, so this method is somewhat reactive. Indicators can be found directly in the observed environment, incorporated into defensive mechanisms through automated detection tools, or pulled from open source directories like VirusTotal. Further, an indicator is only good as long as it’s relevant: an adversary can change their infrastructure, like command and control (C2) servers, thus rendering the C2 artifacts moot.
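A minimal sketch of indicator matching might look like the following. The indicator values, the event fields and the `match_indicators` helper are hypothetical; real indicators would come from observed incidents or threat intelligence, and the addresses and domains here are from reserved documentation ranges.

```python
import hashlib

# Hypothetical indicator set, keyed by indicator type.
IOCS = {
    "ip": {"203.0.113.7"},
    "domain": {"update-check.example.net"},
    "sha256": {hashlib.sha256(b"malicious payload").hexdigest()},
}


def match_indicators(event, iocs=IOCS):
    """Return (type, value) pairs from the event that match known indicators."""
    hits = []
    for ioc_type, known_values in iocs.items():
        value = event.get(ioc_type)
        if value is not None and value.lower() in known_values:
            hits.append((ioc_type, value))
    return hits


event = {
    "host": "ews-hq-01",
    "ip": "203.0.113.7",  # matches a known C2 address
    "domain": "vendor.example.com",
    "sha256": hashlib.sha256(b"benign file").hexdigest(),
}
hits = match_indicators(event)
print(hits)

# The same activity after the adversary moves to a new C2 server:
# the old indicator no longer matches anything.
moved_event = dict(event, ip="198.51.100.9")
print(match_indicators(moved_event))
```

The second call returns no hits, which is the weakness described above: once the adversary changes infrastructure, the stored indicator stops being useful until a new one is observed.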

Threat behavior detection uses commonalities in adversary tactics, techniques and procedures (TTPs) to create a comprehensive analytic capturing the observed threat. Defenders can then leverage the underlying behavior rather than individual IOCs to target variations of the observed TTPs, irrespective of changing IOCs. The behavior can be compared against known malicious activities. For instance, the specifics for activity group ALLANITE include targeting electric utilities using watering hole and phishing attacks leading to industrial control system reconnaissance. The group uses legitimate Windows tools to conduct its activities. Indeed, this type of detection can flag seemingly legitimate behaviors as malicious. For example, it could alert on an attacker using the VPN to access the network, creating and using new credentials, downloading a file on an engineering workstation, and then attempting to log into a PLC. While this approach is resilient against adversaries changing infrastructure and IOCs, it cannot be automated and is time-consuming to implement initially.
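To make the VPN-to-PLC example concrete, here is a sketch of what a hand-written behavior analytic could check once an analyst has defined it. Everything in it is an assumption for illustration: the action names, the 24-hour window, and the premise that events have already been grouped by user or session. Writing and tuning the analytic remains the manual, time-consuming part.

```python
from datetime import datetime, timedelta

# Hypothetical behavior: the ordered steps from the example above.
BEHAVIOR = [
    "vpn_login",
    "credential_created",
    "ews_file_download",
    "plc_login_attempt",
]


def matches_behavior(events, behavior=BEHAVIOR, window=timedelta(hours=24)):
    """Return True if the behavior steps occur in order within the window.

    `events` is a list of (timestamp, action) tuples for a single user
    or session; unrelated actions in between are ignored.
    """
    ordered = sorted(events)
    for i, (start_time, action) in enumerate(ordered):
        if action != behavior[0]:
            continue
        step = 1  # index of the next behavior step we are waiting for
        for timestamp, later_action in ordered[i + 1:]:
            if step == len(behavior) or timestamp - start_time > window:
                break
            if later_action == behavior[step]:
                step += 1
        if step == len(behavior):
            return True
    return False


t0 = datetime(2024, 1, 1, 8, 0)
suspicious = [
    (t0, "vpn_login"),
    (t0 + timedelta(minutes=10), "credential_created"),
    (t0 + timedelta(minutes=25), "ews_file_download"),
    (t0 + timedelta(minutes=40), "plc_login_attempt"),
]
routine = [
    (t0, "vpn_login"),
    (t0 + timedelta(minutes=5), "ews_file_download"),
]

print(matches_behavior(suspicious))
print(matches_behavior(routine))
```

No step in `suspicious` is malicious on its own, and none depends on a specific IP address or hash. The check keys on the ordered combination, which is why it keeps working when the adversary changes infrastructure.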

To defenders wondering about the best approach, there isn’t one. It’s entirely environment-dependent and goes back to your threat model. What is most important? If you want to discover new activity and figure out the full scope of an incident, configuration detection checks those boxes. For good transparency, flexibility and resilience to the changing threat landscape, use threat behavior detection.

However, it is a good idea not to rely on one method alone.

As the threat landscape evolves and defenders can access increasing amounts of data and knowledge about how adversaries operate, we will build better tools and procedures to detect hackers, including some that don’t fall into these categories, or realize that some strategies don’t work. Threat detection is not static, and the future is open for innovations and new mechanisms to defend ourselves.

Selena Larson is an intel analyst for Dragos. As a member of the threat intelligence team, she works on reports for WorldView customers including technical, malware and advisory group analyses, and writes about infrastructure security on the company’s blog. She works to combat fear, uncertainty and doubt surrounding malicious activity targeting ICS environments and help people better understand complex concepts and behaviors. Previously, Larson was a technology reporter, most recently at CNN. She reported on privacy and security issues within the technology industry including ICS threats. In 2017, she was a fellow at the Loyola Law School Journalist Law School program, the only cybersecurity reporter to be selected that year. Larson lives in San Francisco. She writes short fictional stories speculating on our technological future and how things like robots, virtual reality and increasing connectivity impact our brains, relationships and human behavior.
