Outage Management and Distribution Management Systems are mission-critical applications that must be available, responsive, accurate, and reliable at all times. Proper quality assurance and testing are critical success factors for implementing these systems.

The modern grid is growing in sophistication, and utilities face many challenges that dramatically increase the complexity of operating and managing the electric distribution system. Customers have increasingly high expectations for service reliability and electric power quality. Large amounts of data are made available by smart grid technologies. Distributed energy resources (DER) and microgrids are increasingly common in the operation of distribution networks. In response, Outage Management Systems (OMS) and Distribution Management Systems (DMS) are becoming more complex and robust. Advanced Distribution Management Systems (ADMS) are also emerging as a single integrated platform that supports the full suite of distribution management and optimization.

There is a great deal of activity on the OMS and DMS fronts. According to recent reports, global utility OMS spending is expected to total nearly $11.8 billion from 2014 to 2023 [1], while global ADMS revenue is expected to grow from $681.1 million in 2015 to $3.3 billion in 2024 [2]. It is vital for utilities to understand and use good testing methodology to maximize the benefits from these investments. Strong methodology and execution can be the difference between a successful investment and one that brings ongoing problems, decreased confidence, and additional money and time spent supporting these applications after go-live.

High demands are placed on these mission-critical applications, not just during abnormal conditions (e.g., storms), but also under normal (non-storm) conditions. During severe storms, the OMS/DMS systems are critical for quick and cost-effective restoration. A utility cannot afford sluggish performance, inaccurate or outdated information, or system failures. A different set of challenges exists during non-storm conditions. For example, this is the time for planned switching activities, where crew safety is of the utmost importance. The information must be timely and reliable; otherwise, faulty switching, grounding, and tagging information could jeopardize repair crew safety.

Testing is key to achieving a properly functioning system that users are confident in. OMS/DMS system testing needs to be well planned and executed whether it is for a full implementation, an upgrade, adding or updating an integration, or installing a maintenance patch. Testing verifies whether:

- The system and environment are robust enough to stand up to stress from heavy load

- Information maps correctly to displays

- Correct data field mapping exists, connecting the data location in the source system (e.g., GIS) to where it is used in the OMS/DMS/ADMS

- The data contained in the source system is correct (bad data in the GIS will show erroneous information in the OMS/DMS/ADMS, even with proper mapping)

- Outages predict to reasonable devices at the proper speeds

- Appropriate warning and violation notifications are produced

- The general appearance of the model (i.e. electric, geographic, background) is acceptable

- The system fully supports the end-to-end business processes

- The application is responsive under high stress and heavy use

- All integration flows properly function and are responsive

- High availability / failover works as expected

- Advanced applications complete and produce results within the expected time

Keeping the following Critical Success Factors (CSFs) in mind will help utilities develop and execute a well-planned testing methodology that prevents (or identifies early) as many defects as possible. These CSFs are based on many years of experience testing OMS/DMS/ADMS and the lessons learned along the way. They attempt to strike a balance between excessive (wasteful) and insufficient (missed defects) testing.

CSF #1: Create Testable Requirements

Some projects treat requirements writing only as a design input and forget their importance to testing. Requirement identification/writing is where testing should begin. Requirements should be tightly coupled to testing (i.e. requirement based testing).

When defining requirements, use short, concise and testable statements. Avoid multi-sentence and run-on requirements. Requirement statements containing multiple thoughts should be separated into multiple requirements. A single action with a single result makes the best testable requirement.

When defining a requirement, ask yourself, "How can this be tested?" The goal of testing is to verify the requirement. If the requirement isn't testable, then it probably shouldn't be called a requirement and should be removed. Removing untestable requirements is just as important as writing testable ones. Vagueness in requirements can lead you astray. Some examples of vague, non-testable requirement statements are:

- The system shall group outages appropriately (too subjective)

- The system shall support all the users needed in a storm (exactly how many is "all"?)

- The model in the viewer shall look presentable (too subjective)

Testable requirements have specific measures that can be verified, for example:

- The system shall group 3 transformer outages of the same phase to the upstream device

- The system shall support 50 concurrent users

- Devices in the model shall have and support all the attributes defined in the device attribute specifications document

OMS/DMS/ADMS are complex systems, so requirement flaws do occur. Examples of flaws include inexact, broadly written, or totally missing requirements. Low quality or missing requirements directly impact the project and result in excessive questions during test creation, important functionality going untested, and insufficient time for completing test execution. Insufficient requirements can lead to extended ad-hoc testing. Testers (especially end users) expect to verify whether functionalities work as expected/desired even in the absence of a specific test case for it. It is important to perform the necessary testing, even if it is ad-hoc. Poor or missing requirements can result in uncertainty, misinterpretation, rework, test case defects, and unplanned testing added late in the testing cycle. Ultimately, as a consequence of inadequate requirements, the project schedule slips.

The requirements creation process can help avoid or find defects early-on in the project. It can help identify product limitations, discover defects in the core system, and - by thoroughly working through the business processes - it can help identify or confirm what functionalities and information are required. The earlier a defect is found, the less expensive it is to resolve.

CSF #2: Freeze Requirements

Adding or changing requirements, especially in complex systems like OMS/DMS/ADMS, risks system stability and adds duration to testing and ultimately to the project. These changes typically occur at the most inopportune time, when you are about to exit testing. Adding or changing requirements too late in the game means new tests to create and execute, earlier testing that is invalidated or wasted, and functionality that previously passed but now has defects. Late changes have often been rushed into the system with abbreviated unit and regression testing, which increases the risk of new defects.

The reality is that requirements do change throughout the lifecycle of a project. Good change management is the solution to this dilemma. Establish a requirement freeze and stick to it. If a change comes in during testing, consider deferring it and planning it into a future release, when the change can be made more safely.

CSF #3: Define the Rules

The testing methodology should be documented in a functional test plan. The plan defines the rules of the testing engagement including reviews, entrance criteria, test case development (including style sheet), test execution schedule, defect writing and triage and tracking, fix promotion, and exit criteria.

Before delving into the minutiae of testing, let's define a few testing terms (Table A).

Table A - Testing Terminology Definitions

| Term | Definition |
| --- | --- |
| Test Case | The standard term to use in reference to the test. A test case contains a series of test steps. |
| Test Plan | A document created to plan out the test phase. It outlines the approach taken from the planning stage to execution and on through closure of the testing phase of the project. This is a detailed plan, not merely a project work breakdown. |
| Test Script | A short program or executable file used in automated testing. |
| Test Step | The simplest instruction of a test containing a description of the action to take and the action's expected result. |

Reviews

Identify what needs to be reviewed and by whom. A solid requirement review helps establish better frozen requirements. The review list could include: requirements, test cases, and defects. The list of reviewers could include business analysts, subject matter experts (SME), operators, project managers, and testers.

Entrance Criteria

Entrance criteria define what needs to be done prior to starting the test execution phase. This list could include a reviewed and signed-off test plan, reviewed and approved test cases, approved requirements, GIS/customer/estimated restoration data readiness, and hardware/software components to be installed on the target test environments.

Test Case Development

Test case development defines the step-by-step procedure for what to test and what the expected results of the test should be. Since this is the most time-consuming part of testing, some definition and standardization is warranted here. Who is going to write the tests and who is going to execute them both influence test development time. If the consumer of your tests is more than just testing/management, such as test automation or training, test development will take longer (sometimes much longer). It's important to be clear on who is going to use your testing efforts.

Here are some tips for creating quicker, clearer test cases:

- Write test cases using the active voice. Avoid 'should', e.g., "You should have seen an RO symbol." Instead write: "An RO symbol is displayed." The shorter and more succinct, the better.

- Avoid using future tense. Avoid 'will be', e.g., do not say: "You will see an RO symbol." At that point the tester already sees an RO symbol. Instead write: "An RO symbol appears on the Viewer."

- Use different test data for each test case. In an OMS/DMS/ADMS you'll likely have several hundred test cases and multiple testers executing test cases at the same time. Having different test data minimizes or eliminates potential collisions with other testers. Mitigating the impact of testers working in the same area avoids unnecessary bugs and invalidated test executions.

- Contain one entire function per step. This can include multiple sub-steps to accomplish a function. The idea here is to accomplish one basic thing per test step. For example:

    - In the Viewer, press the Search icon,

    - Enter into 'Device': xf_123456, then

    - Press Search

  This could be written as three steps, but the goal is to load the map containing xf_123456 in the Viewer application. Since a step like this might be executed many times, it is less tedious for both the test writer and the tester if it is written as a single step.

- Use a standard test case style sheet for designing test cases (see Appendix A). Standardized styles help establish and enforce consistency.

- Test steps must have enough punctuation and spacing so that the intent of the step is clear, but avoid overly strict adherence to every grammatical rule. Excessive attention to grammar rules extends test writing time that could be better spent validating the software.
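The tip about using different test data for each test case can be enforced when test cases are planned. The sketch below is one possible approach under stated assumptions: the function name `assign_test_devices`, the test case IDs, and every device ID other than xf_123456 are hypothetical.

```python
def assign_test_devices(test_case_ids, device_pool):
    """Give each test case its own device so testers never share test data."""
    if len(set(device_pool)) != len(device_pool):
        raise ValueError("device pool contains duplicates")
    if len(device_pool) < len(test_case_ids):
        raise ValueError("not enough devices for one per test case")
    # zip stops at the shorter sequence, so surplus devices stay unassigned.
    return dict(zip(test_case_ids, device_pool))


if __name__ == "__main__":
    plan = assign_test_devices(
        ["TC-001", "TC-002"],                     # hypothetical test case IDs
        ["xf_123456", "xf_223344", "xf_334455"],  # hypothetical device pool
    )
    print(plan)
```

Failing fast when the pool is too small or contains duplicates surfaces a data collision during planning rather than in the middle of test execution.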

Test Execution Schedule

Test execution schedule defines what is to be tested, who is going to be doing the testing, and when.

Defect Writing and Triage

Defect writing and triage defines how a defect is written, what the defect severity levels are, and how defects are processed. The goal is to resolve and close defects as quickly as possible. This goal fails if a defect is poorly written or the processing is too slow and cumbersome.

Fix Promotion

Fix promotion defines the process of how fixes are promoted from the development system into test environments and then on to production. For retesting and defect closure, it is important to know: 1) what results are expected, 2) when the fix is available, 3) how it gets deployed, and 4) in which environments to deploy it. Having this process defined builds confidence in the fix deployments and provides the list of defects to assign for retest.

Exit Criteria

Exit criteria define what needs to be accomplished to allow exiting the test execution phase. They simply answer the question "How do we know when we are done?" This includes the required pass rate of the test set (usually 100%), the acceptable number of open defects at each severity level, and if/how exceptions or workarounds are to be documented and signed off.
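Exit criteria of this kind are simple enough to evaluate mechanically at the end of each test cycle. The following is a minimal sketch under stated assumptions: the function name `exit_criteria_met`, the severity labels, and the default defect limits in `DEFAULT_MAX_OPEN` are hypothetical and would come from the project's own test plan.

```python
from collections import Counter

# Hypothetical limits on open defects per severity; set these in the test plan.
DEFAULT_MAX_OPEN = {"critical": 0, "high": 0, "medium": 5, "low": 10}


def exit_criteria_met(results, open_defects, required_pass_rate=1.0, max_open=None):
    """Check pass rate and open-defect counts against the exit criteria.

    results: list of "pass"/"fail" strings, one per executed test case.
    open_defects: list of severity labels, one per open defect.
    """
    limits = DEFAULT_MAX_OPEN if max_open is None else max_open
    if not results:
        return False  # nothing executed, so nothing has been verified

    pass_rate = results.count("pass") / len(results)
    if pass_rate < required_pass_rate:
        return False

    # A severity with no defined limit is treated as "none allowed".
    counts = Counter(open_defects)
    return all(count <= limits.get(sev, 0) for sev, count in counts.items())


if __name__ == "__main__":
    print(exit_criteria_met(["pass"] * 10, ["low", "low", "medium"]))
    print(exit_criteria_met(["pass"] * 9 + ["fail"], []))
```

Documented exceptions and workarounds still need a human sign-off; a check like this only covers the measurable part of the criteria.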

CSF #4: Use Business and Non-business Teams Appropriately

Establish a team of diverse, motivated, and collaborative testers. The testers need to bring different perspectives, experiences, and agendas. A pure tester will validate end cases and boundary conditions, whereas operators bring real-life process experience to look at factors not specifically written into the test case. Both kinds of team members are needed, and both will find defects. The critical objective is to find important defects as fast as possible.

CSF #5: Keep It Simple and Lean

There is a lot to test on an OMS/DMS/ADMS. There is a fine line between being thorough and 'over-processing.' Due to the criticality of outage and distribution management, it is important to fully test the system, but at the same time, it may not be necessary to formally test every exit button in every configuration of every tool. For certain functions, a sufficient sample of tests will fulfill the testing need.

Over-processing testing has multiple downsides. Some of these include more test cases to create and maintain, more calendar duration to execute them all, more results to track, mental exhaustion, and ultimately, increased cost to the project.

Maintenance patch testing also has the potential for over-processing. The system needs to keep current, yet change induces instability. Those maintenance patches often contain critical fixes to the system. Having those fixes could prevent future system down times encountered at inopportune times. The tester's job is to test these patches well enough to ensure system stability.

Within a test case, keep test steps as simple as possible. Some steps test basic core functionalities that will be performed thousands of times during a test cycle. If you can turn five low-level test steps into one appropriately sized step, then do it. The benefits include making the test easier for the tester to read and follow, avoiding mental fatigue, and allowing greater focus on the important aspects of the test.

CSF #6: Diversify your Testing

The depth of data combined with diverse functional paths makes OMS/DMS/ADMS testing unique compared to other utility power applications. An operator can follow their usual path to perform a function, such as opening a fuse, using menus, dropdowns, and/or popup windows. The same function could also be performed using power keys. Testers need to be instructed not to perform a function the same way every time (e.g., menu selections vs. icon clicks, double-clicking vs. selecting and clicking an OK button, right-click menus). It is important to test the system in various ways. Test coverage increases when the different paths to perform a function are tested.

Model data is another area that needs diversity in testing. Different parts of the model may react differently than others. Some regions may have feeders with a unique device type or more dense population. Certain regions have older devices, sometimes with obsolete data or devices. Other regions will be newer and uncluttered. It is important that testing incorporates this data diversity. Data issues that exist are further compounded by the current trend of merging utility companies. While it's impractical, if not impossible, to test every data point, testing on several diverse areas will provide reasonable test coverage.

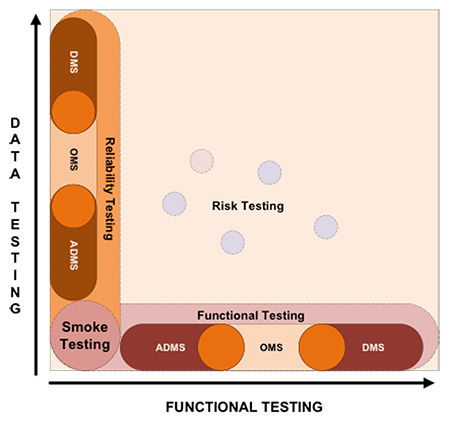

Figure 1 shows how diversity increases testing coverage. In OMS/DMS/ADMS, the data axis is multiplied by each component.

Figure 1 - Diversity and Test Coverage
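The multiplication of paths by data can be made concrete with a small enumeration. The sketch below is illustrative only: the function names, interaction paths, and model regions are hypothetical examples, and the "each-choice" sampling shown is one common way to pick a diverse subset, not a method prescribed by this paper.

```python
from itertools import product


def full_matrix(functions, paths, regions):
    """Every function x interaction path x model region combination."""
    return list(product(functions, paths, regions))


def each_choice_sample(functions, paths, regions):
    """Smaller set in which, for every function, each path and each region
    is used at least once (each-choice coverage)."""
    n = max(len(paths), len(regions))
    return [
        (func, paths[i % len(paths)], regions[i % len(regions)])
        for func in functions
        for i in range(n)
    ]


if __name__ == "__main__":
    functions = ["open fuse"]                          # hypothetical
    paths = ["menu", "right-click menu", "power key"]  # hypothetical
    regions = ["dense urban", "older rural"]           # hypothetical
    print(len(full_matrix(functions, paths, regions)), "combinations in total")
    for case in each_choice_sample(functions, paths, regions):
        print(case)
```

The full matrix grows multiplicatively with every added path or region, which is why testing several diverse areas, rather than every data point, is the practical target.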

CSF #7: Decide Where to Spend the Most Effort

When planning what to test, determine what needs the most attention. Your time is limited and there are many features to test, so take the time to determine where your efforts will be best spent. Recommended focus areas include:

- Overall system performance and stability

- Areas that would risk injury or death, should there be defects

- Features that must produce accurate results

- Features that must produce reliable results

- Features that are new to the product or your implementation

- Features that are unique to your implementation

- Features that have historically been problematic

- Highly configured features

When deciding where to place attention, consider new features that have not had time to mature (possible undiscovered design flaws) and functionalities that are not used by many (or any) other utilities and thus do not have the advantage of being tested during the software manufacturer's release testing.

Finally, performance is always worthy of significant attention. Under the stress of storms, the risk is that performance degrades or the system and/or interfaces crash. At one utility, insufficient performance testing led to such poor production performance that they discussed turning off their OMS during storms, taking away what this tool was designed to do: help resolve outages. Eliminating sluggishness and unreliability is critical to success. Performance-related issues can be caused by software flaws, data, misconfiguration, or the computing environment (e.g., inadequate hardware, database tuning, network issues). Make a plan that includes testing with the full electrical model, all interfaces, high numbers of users, high numbers of callers, and high levels of system activity. Be aware that not every performance issue is caused by a software bug. Other potential sources include the network infrastructure, the database, and the server operating system. If testing identifies performance issues, it is not uncommon for any of these IT disciplines to be called on to diagnose the issue.

SUMMARY

Testing an OMS/DMS/ADMS presents a unique set of challenges. Too frequently, the requirements phase of such projects is lacking or omitted altogether in order to save time. Requirements are critical not only for identifying how the system should function, but also as the starting point for testing. Structuring requirements so that they're testable reduces defects and saves time. Controlling change to those requirements mitigates many risks, especially when change happens deep into testing. With great amounts of data and multiple paths to perform actions, diversity in the test cases and the test team is imperative. There is a limit to how much testing can be done, so decide on priorities and stick to them. Following a well-planned testing methodology protects the value of the financial investment.

APPENDIX A - TEST CASE STYLE SHEET

The following sample convention standardizes the common 'test language' within a test case, improving test execution. It also provides consistent documentation and increases test clarity for the test executor. The convention is designed with HP QC/ALM testing tools in mind.

| Convention | Summary | Detailed Description |
| --- | --- | --- |
| PreCondition | Pre-condition step | A special test step that documents conditions that must be met before executing the test case. |
| PostCondition | Post-condition step | A special test step that documents conditions that restore the system to its original state upon completing the test case. |
| Tool Name | Tool Name | Tool name should start with a capital letter. |
| [sample] | Button | A word encased in [] brackets represents a button. E.g. [Cancel] |
| <sample> | Data to Enter | A word encased in <> less than/greater than represents data to enter into a field. E.g. <Don't let the person in>. |
| Sample | Web Link | A blue underlined word represents a web link. E.g. View all positions and access levels or Sharepoint.utilitycompany.com/testing_docs/data_file.doc |
| Magenta | Uncertainty | Any wording in magenta color means the author is uncertain about the statement, which is to be revised later. |
| “Backspace” | Key on keyboard | A word encased in “” double quotes represents keys on the keyboard. E.g. “M”, “Backspace” |
| {sample} | Error message | A word encased in {} braces represents an error message. E.g. {Internal error} |
| CLICK | On button and link | The keyword CLICK represents an action to click on a button or link. |
| PRESS | On keyboard keys | The keyword PRESS represents an action to press on keyboard keys. |
| CHECK | Checkboxes and radio buttons | The keyword CHECK represents an action to select the checkboxes or radio buttons. |
| ENTER | Text into a field | The keyword ENTER represents an action to enter text into a field. |
| SELECT | From a drop down list | The keyword SELECT represents an action to select an item from a drop down list. |
| Selection \| Sub selection | A “\|” (pipe) symbol | The separation character to indicate cascading menu selections. |
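Because the style sheet uses distinct delimiters for each element, a test step written in this convention can be picked apart with a few regular expressions, for example to review steps for consistency or to feed test automation. This is a minimal sketch under stated assumptions: the function name `parse_step` and the sample step are hypothetical, and this is not a feature of HP QC/ALM.

```python
import re

# Action keywords taken from the style sheet above.
ACTION_KEYWORDS = ("CLICK", "PRESS", "CHECK", "ENTER", "SELECT")

# One pattern per delimiter defined in the style sheet.
PATTERNS = {
    "buttons": re.compile(r"\[([^\]]+)\]"),     # [Cancel]
    "data": re.compile(r"<([^>]+)>"),           # <xf_123456>
    "keys": re.compile(r'["“]([^"”]+)["”]'),    # "Backspace", straight or curly quotes
    "errors": re.compile(r"\{([^}]+)\}"),       # {Internal error}
}
ACTION_PATTERN = re.compile(r"\b(?:" + "|".join(ACTION_KEYWORDS) + r")\b")


def parse_step(step):
    """Extract style-sheet elements from a single test step description."""
    parsed = {name: pattern.findall(step) for name, pattern in PATTERNS.items()}
    parsed["actions"] = ACTION_PATTERN.findall(step)
    return parsed


if __name__ == "__main__":
    # Hypothetical step written in the convention.
    print(parse_step('CLICK [Search], ENTER <xf_123456>, then PRESS "Backspace"'))
```

Color-based conventions (blue links, magenta uncertainty markers) are formatting rather than text, so they are outside what a plain-text parser like this can detect.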

About the Authors

Don Hallgren is a Senior Integration Test and QA Engineer with UISOL, an Alstom Company. He has over 20 years of experience in manual and automated testing for utility projects and technology. Don specializes in in-depth testing of complex systems, and manages people, projects, and processes to ensure on-time delivery. He holds BS degrees in Computer Science and in Electronic Engineering Technology. Reach Don at dhallgren@uisol.com

Ross Shaich is an OMS Consultant with UISOL, an Alstom Company. He has over 17 years of experience in OMS implementation and support of large-scale enterprise projects. He has served as subject matter expert, test lead, functional lead, test designer and project manager. He holds a Master's Degree in Project Management and is a certified PMP. Reach Ross at rshaich@uisol.com

References

[1] Outage Management Systems: Integration with Smart Grid IT Systems, IT/OT Convergence, and Global Market Analysis and Forecasts. Navigant Research. March 2015.

[2] Advanced Distribution Management Systems: Software Purchases and Upgrades, Integration Services, Maintenance, and Analytics: Global Market Analysis and Forecasts. Navigant Research. March 2015.