Today, data of all types drive decisions within organizations. Those who ask the right questions, and have the right data to answer them, often gain a significant advantage over the competition. Solid data assets are essential for answering these questions, whether we are talking about “big data,” “little data” or content of another kind. As part of their business strategies, many organizations seek to leverage content that others have already created or managed. Third-party data acquisition can save significant time and, in many instances, expensive labor. Pitfalls, however, do exist. I have managed geospatial data acquisition efforts within my organization for nearly 10 years. Here are some of the best practices I have learned during that time for avoiding the common pitfalls of third-party data acquisition.

1. Define Your Purpose

Before you consider any data acquisition strategy, you must first define the question, or questions, you are trying to answer with the data you plan to acquire. If you do not clearly establish the purpose of the database you are building, frustration follows: you will often find that the data lacks either completeness or a suitable data architecture. By establishing the “why” behind your data request early on, you will be able to answer many of the inevitable questions that come up along the way. This exercise is often one of the most difficult parts of preparing your acquisition plans, and it can take more time than you initially expect. Beginning with a clear purpose is critical to both the initial and the ongoing success of your effort.

2. Seek Out Your Sources

Once you have established a firm set of questions, or a clear purpose for your data architecture, you will be in a position to start seeking sources for the content you need. Many factors deserve consideration when seeking data inputs, and many of them will be unique to your particular use. However, some data quality and completeness considerations are nearly universal and belong in any acquisition strategy. Early on, you’ll want to consider how long the data you are acquiring needs to remain useful:

- Are you looking to support a brief project that will not require long-term data access, updates to the data and long-term consistency?

- Are you seeking to support a longer-term decision process, one that will last for many years? If so, how important is consistency from one iteration to the next?

- If an existing provider, for whatever reason, is no longer able to provide content to you, will that be acceptable? What are the possible alternatives for securing another provider?

All of these issues must be carefully considered when exploring data sources.

3. Ensure Data Quality

Quality can be subjective and depends directly on the use case behind your data needs. Because quality can be difficult to quantify, understanding the goals you are trying to achieve is critical to determining whether the quality of potential content is sufficient for your needs.

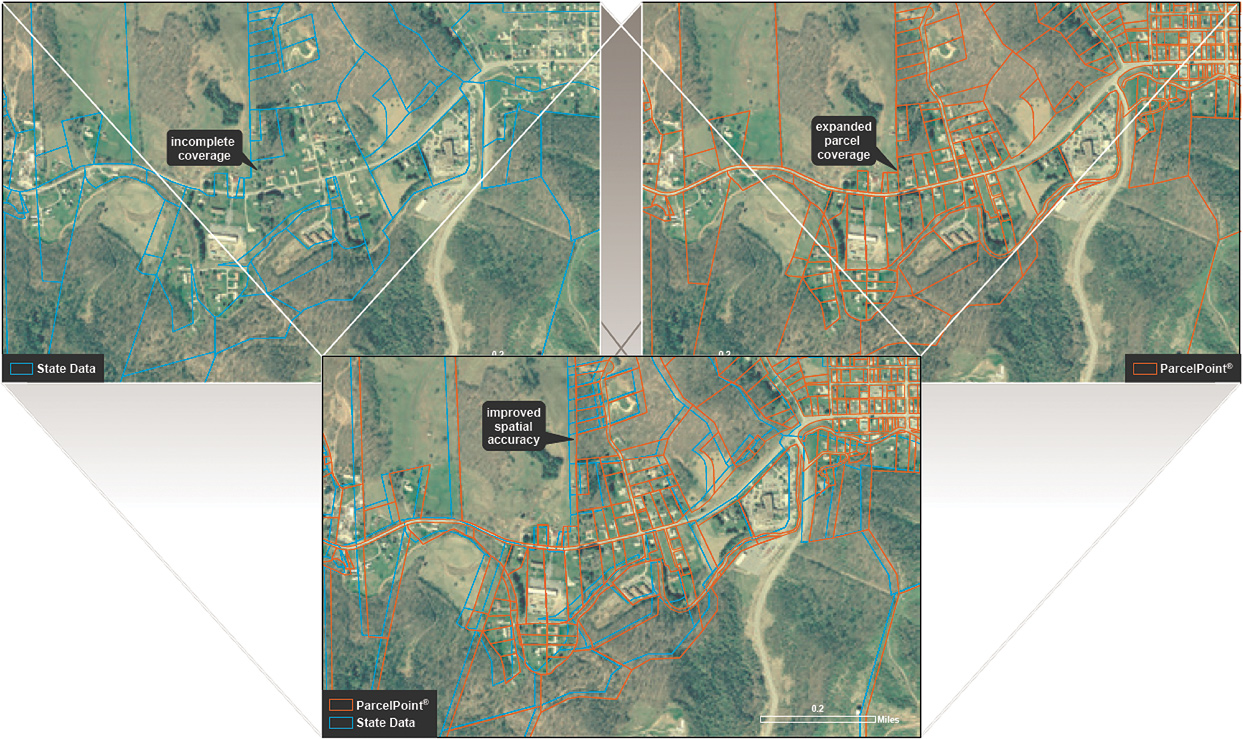

My experience with data acquisition has mostly involved spatial, or mapping, data. By mapping, I mean the word in its cartographic sense. One of the earliest questions CoreLogic® struggled with on its data acquisition journey was how to express the true quality of the data in terms of positional accuracy. When evaluating the positional accuracy of spatial data, many will tell you that comparing the data to an aerial image is the best way to evaluate its quality. On the surface this makes a lot of sense, as our natural inclination is to believe what our eyes are telling us; after all, seeing is believing, right? However, as any photogrammetrist will tell you, not every aerial image can be trusted, since a picture, like a paper map, attempts to represent a three-dimensional world in a two-dimensional space. Aerial imagery must be processed to exacting standards to produce true mapping-quality orthophotography. Luckily, the Federal Geographic Data Committee has published a standard for spatial data accuracy, which CoreLogic used to arrive at a quantitative method for determining the true quality of the data we were acquiring. In some instances, we found it necessary to make significant improvements to the spatial quality of our source data. Applying this standard made it possible to set quantitative acceptance criteria for source content from more than 5,000 providers.
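The FGDC standard mentioned above is most likely the National Standard for Spatial Data Accuracy (NSSDA, FGDC-STD-007.3-1998). The article does not describe CoreLogic’s actual procedure, so what follows is only a minimal sketch of an NSSDA-style horizontal accuracy check. It assumes you have matched pairs of points: positions taken from the candidate dataset, and the same locations measured from an independent source of higher accuracy, both in the same projected ground units. It uses the NSSDA expression Accuracy_r = 1.7308 × RMSE_r, which applies when the x and y errors are roughly equal. The function names and the `max_accuracy` threshold are hypothetical.

```python
import math
from typing import Sequence, Tuple

# (x, y) in projected ground units, e.g. metres; both point sets must share one CRS.
Point = Tuple[float, float]


def nssda_horizontal_accuracy(tested: Sequence[Point], reference: Sequence[Point]) -> float:
    """Horizontal accuracy at the 95% confidence level, computed NSSDA-style.

    `tested` holds positions from the dataset under evaluation; `reference` holds
    the matching checkpoint positions from an independent, more accurate source.
    The 1.7308 factor assumes RMSE_x and RMSE_y are approximately equal.
    """
    if not tested or len(tested) != len(reference):
        raise ValueError("need equal, non-zero numbers of tested and reference points")
    n = len(tested)
    rmse_x = math.sqrt(sum((t[0] - r[0]) ** 2 for t, r in zip(tested, reference)) / n)
    rmse_y = math.sqrt(sum((t[1] - r[1]) ** 2 for t, r in zip(tested, reference)) / n)
    rmse_r = math.sqrt(rmse_x ** 2 + rmse_y ** 2)  # radial (horizontal) RMSE
    return 1.7308 * rmse_r


def meets_threshold(tested: Sequence[Point], reference: Sequence[Point], max_accuracy: float) -> bool:
    """Hypothetical acceptance test: max_accuracy is a use-case-specific limit."""
    return nssda_horizontal_accuracy(tested, reference) <= max_accuracy


if __name__ == "__main__":
    # Two hypothetical checkpoints for illustration only.
    dataset_pts = [(100.0, 200.0), (300.0, 400.0)]
    checkpoints = [(103.0, 204.0), (300.0, 400.0)]
    print(round(nssda_horizontal_accuracy(dataset_pts, checkpoints), 3))  # 6.119
    print(meets_threshold(dataset_pts, checkpoints, max_accuracy=5.0))    # False
```

The NSSDA calls for at least 20 well-distributed checkpoints, so a real evaluation would use far more points than this illustration. The acceptance limit itself should come from the purpose you defined at the outset.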

4. Ensure Data Completeness

When evaluating the completeness and quality of any type of data, it is also important to consider the attributes you will receive as part of the dataset. Some datasets contain few attributes and some contain many. Depending on the questions you defined earlier, some attributes will matter more than others to your decision-making process. Once you understand which attributes are most important for your use, you can request additional detail about them from any content provider. One important consideration is the population percentage of each attribute, that is, the share of records in which the attribute is actually filled in. This will help you determine whether the data a content provider offers is sufficient for your business needs.
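Population percentages are straightforward to compute once you have a sample of a provider’s records. The sketch below is a minimal illustration. It assumes records arrive as Python dictionaries, and that `None` or a blank string means an attribute is unpopulated. The attribute names and minimum percentages in the usage example are hypothetical.

```python
from typing import Any, Dict, Iterable, List


def is_populated(value: Any) -> bool:
    """Assumption: None and blank/whitespace-only strings count as unpopulated."""
    if value is None:
        return False
    if isinstance(value, str) and not value.strip():
        return False
    return True


def population_percentages(
    records: List[Dict[str, Any]], attributes: Iterable[str]
) -> Dict[str, float]:
    """Return, for each attribute, the percentage of records in which it is populated."""
    total = len(records)
    percentages: Dict[str, float] = {}
    for attr in attributes:
        filled = sum(1 for rec in records if is_populated(rec.get(attr)))
        percentages[attr] = 100.0 * filled / total if total else 0.0
    return percentages


def below_minimum(percentages: Dict[str, float], minimums: Dict[str, float]) -> List[str]:
    """List the attributes whose population falls below the required minimum."""
    return [attr for attr, minimum in minimums.items() if percentages.get(attr, 0.0) < minimum]


if __name__ == "__main__":
    # Hypothetical sample of parcel records from a prospective provider.
    sample = [
        {"parcel_id": "A1", "owner": "Smith", "year_built": 1987},
        {"parcel_id": "A2", "owner": "", "year_built": None},
        {"parcel_id": "A3", "owner": "Lee"},
    ]
    pcts = population_percentages(sample, ["parcel_id", "owner", "year_built"])
    print(pcts)
    print(below_minimum(pcts, {"parcel_id": 100.0, "year_built": 90.0}))  # ['year_built']
```

The minimums belong to you, not the provider: they follow from which attributes your questions actually depend on.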

5. Metadata Can Lead to Better Data

An area that is often overlooked when evaluating content is the quality of the supplied metadata. Metadata is simply data about the data. Quality metadata provides great detail about the content you are using: how the data was collected or created, the currency of the data, what content is delivered and whom to contact about discovered issues, to name just a few. Comprehensive metadata is an indication that the provider takes data management seriously and takes pride in its responsibility to provide you with quality data.
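One way to make metadata review repeatable is a simple checklist screen. The sketch below assumes, hypothetically, that a provider’s metadata has been parsed into a dictionary with the field names shown. Real metadata, for example records following the FGDC Content Standard for Digital Geospatial Metadata, uses its own element names, which you would map onto these. The optional age limit is likewise a hypothetical parameter you would set from your own purpose.

```python
from datetime import date, datetime
from typing import Any, Dict, List, Optional

# Hypothetical field names standing in for the metadata elements discussed above.
REQUIRED_FIELDS = {
    "collection_method": "how the data was collected or created",
    "last_updated": "currency of the data",
    "content_description": "what content is delivered",
    "support_contact": "whom to contact about discovered issues",
}


def review_metadata(
    metadata: Dict[str, Any], today: date, max_age_days: Optional[int] = None
) -> List[str]:
    """Return human-readable findings; an empty list means nothing was flagged."""
    findings: List[str] = []
    for field, meaning in REQUIRED_FIELDS.items():
        if not metadata.get(field):  # absent, None or empty
            findings.append(f"missing {field} ({meaning})")
    updated = metadata.get("last_updated")
    if isinstance(updated, datetime):
        updated = updated.date()  # compare calendar dates only
    if max_age_days is not None and isinstance(updated, date):
        age = (today - updated).days
        if age > max_age_days:
            findings.append(f"last updated {age} days ago (limit {max_age_days})")
    return findings


if __name__ == "__main__":
    # Hypothetical metadata record from a prospective provider.
    meta = {
        "collection_method": "digitized from recorded plats",
        "last_updated": date(2015, 1, 1),
        "content_description": "parcel polygons with assessor IDs",
    }
    for finding in review_metadata(meta, today=date(2016, 1, 1), max_age_days=180):
        print(finding)
```

A screen like this only flags gaps; judging whether the metadata that is present is accurate still takes a person.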

6. Source with Authority

Finally, seeking out an authoritative source for content is what I consider to be the gold standard of data acquisition. Authoritative data is data produced by an entity with the authority to do so, such as a local government. In many instances, though certainly not exclusively, this data is created to support some other need. A good example is property tax assessment data, which local governments create and maintain to administer property tax assessments. Much of my experience with authoritative data involves data created to meet an existing legal or statutory requirement. The problem with acquiring this type of data is that distributing it is rarely a core part of the entity’s overall mission; the entity has another purpose it is trying to support. This can make efforts to acquire authoritative data time-consuming and frustrating. Luckily, there is a fallback position. Some organizations support internal business activities with content received from these authoritative sources. If properly vetted, these users can become a valuable source of quality content, especially since they have likely considered many of the same questions that you, as a data requestor, are asking.

As you can no doubt see, data acquisition is far more complicated than picking up the phone or typing a term into a search engine. A comprehensive data acquisition strategy ensures that time is not wasted. A well-defined strategy helps an organization achieve its goals, eliminate ambiguity and reach success faster. When you appropriately leverage strong data acquisition practices, you can find the balance between what I like to call professional services and the DIY approach.

About CoreLogic

CoreLogic (NYSE: CLGX) is a leading property information, analytics and solutions provider. The company’s combined public, contributory and proprietary data sources include over 4.5 billion records spanning more than 50 years. The company helps clients identify and manage growth opportunities, improve performance and mitigate risk.

© 2016 CoreLogic, Inc. All rights reserved.

CORELOGIC and the CoreLogic logo are trademarks of CoreLogic, Inc. and/or its subsidiaries.

About the Author

Matt Karli is a senior product manager for CoreLogic Insurance and Spatial Solutions. In this role, Matt is responsible for the product management of the suite of location and jurisdictional geographic boundary products.

Matt has more than 9 years of experience developing and launching high-margin spatial products. He joined the CoreLogic team in 1998 as a Map Researcher for the Flood Services Department. He then moved into roles in both Operations and Data Acquisition before taking on his current Product Management role. During his time at CoreLogic, Matt has overseen the formation and leadership of the team that created the ParcelPoint® product. He has experience in product and project management, map interpretation, GIS, quality control, business process improvement and operations management. Additionally, Matt is one of many patent holders at CoreLogic; he was part of a team that developed a unique spatial intelligence product and patented its technology.

Matt earned a B.S. in Geography and Planning with a minor in Geology from Texas State University, where he also taught several undergraduate geology labs.