According to INSIGHTSQUARED’s infographic [1] on data quality, poor data and lack of visibility into data quality are the No. 1 reason for project cost overruns, and the cost of ‘dirty’ data for US businesses exceeds $600 billion annually.

The term ‘Big Data’ has a lot of buzz in the electric industry, but is this a primary issue for utilities? One basic definition of ‘Big Data’ is a situation in which the amount of data available or received exceeds one’s ability to process or manage it. IBM has described data in terms of the ‘four Vs’ [2] – volume, variety, velocity and veracity. Large data sets certainly fall into the volume category, but the other three characteristics also present big data challenges.

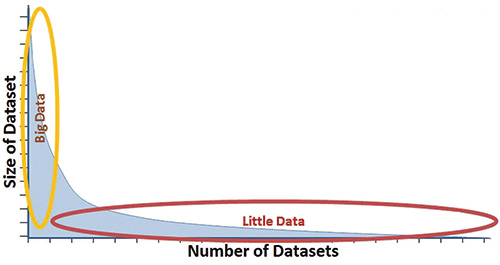

As EPRI enters its second year of transmission and distribution modernization demonstrations (TMD & DMD) on data analytics, its one-year update on findings [3] identifies the general data analytics priorities of 14 utilities, and the volume of data is not the biggest challenge. Although a number of specific data analytics applications are being identified and prioritized, a more significant challenge is what has been called ‘Little Data,’ as shown in Figure 1. A simple definition of ‘Little Data’ is a situation in which the number of data sets exceeds one’s ability to integrate and process the associated data.

Figure 1: Little Data vs Big Data

A major element of the challenge is that common information may exist in separate datasets that represent the same asset, system, or grid model information, but these datasets are managed independently in their own silos. These silos in themselves can result in data inaccuracies or inconsistencies. Creating ways to integrate, improve, and maintain data quality across different systems can have a positive impact on the bottom line. Of the four Vs, both variety (different forms of data) and veracity (uncertainty of data) apply. The combination of opportunities and challenges caused by ‘Little Data’ indicates that data becomes an increasingly valuable asset when there is one accurate ‘source of truth.’
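To make the silo problem concrete, the following is a minimal Python sketch of how two independently managed datasets describing the same assets might be compared. The record fields, the asset identifiers, and the two system names (a GIS and an outage management system) are illustrative assumptions, not data or systems described in the studies cited here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AssetRecord:
    """One system's view of an asset (all fields are hypothetical)."""
    asset_id: str
    rated_kva: float
    feeder: str


def find_mismatches(silo_a, silo_b):
    """Compare two silos keyed by asset_id and list every disagreement."""
    a_by_id = {r.asset_id: r for r in silo_a}
    b_by_id = {r.asset_id: r for r in silo_b}
    issues = []
    for asset_id in sorted(a_by_id.keys() | b_by_id.keys()):
        a, b = a_by_id.get(asset_id), b_by_id.get(asset_id)
        if a is None or b is None:
            issues.append((asset_id, "missing", "present in only one silo"))
            continue
        for field_name in ("rated_kva", "feeder"):
            va, vb = getattr(a, field_name), getattr(b, field_name)
            if va != vb:
                issues.append((asset_id, field_name, f"{va!r} != {vb!r}"))
    return issues


# Two hypothetical silos that should describe the same transformers.
gis = [AssetRecord("XFMR-001", 50.0, "F12"), AssetRecord("XFMR-002", 25.0, "F12")]
oms = [AssetRecord("XFMR-001", 75.0, "F12"), AssetRecord("XFMR-003", 25.0, "F14")]

for issue in find_mismatches(gis, oms):
    print(issue)
```

Even in this toy case, the two systems disagree on a transformer rating and each holds an asset the other does not know about, which is the kind of inconsistency a single ‘source of truth’ is meant to remove.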

The Little Data Challenge

Electric utilities have the potential to gain access to more data from an increasing number of sensors and systems, both internal and external, with new sources continuing to emerge. Grid operations are increasingly dependent on data that cuts across multiple business functional areas in which data representation is very diverse in character or content (i.e., heterogeneous). One basic example is meter data. Historically, meter data has been used exclusively for billing – the utility cash register. But as advanced metering systems have evolved to provide significantly more data, such as voltage and other power quality information, meter data has become a valuable asset to distribution operations. However, these customer and operations systems have historically not been integrated.

This isn’t a new challenge. Dealing with numerous heterogeneous data sets has been an industry hurdle since grid data started to become digitized. In 1996, EPRI’s Guidelines for Control Center Application Program Interfaces [4] identified this challenge. Standards were recognized as a solution, and EPRI worked to advance the industry through the development of what was then a series of new electric industry standards referred to as the Common Information Model (CIM), which includes International Electrotechnical Commission (IEC) standards 61970, 61968 and 62325. The CIM is intended to provide software applications and system models a platform-independent view of the power system with a standards-based information model identifying relationships and associations of the data within an electric utility enterprise.
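As a rough illustration of what a shared information model means in practice, the sketch below defines a few classes whose names are borrowed loosely from the CIM (IdentifiedObject, Equipment, PowerTransformer, Substation). The attributes and associations are heavily simplified assumptions for illustration and do not reproduce the IEC class definitions.

```python
from dataclasses import dataclass, field
from typing import List
import uuid


@dataclass
class IdentifiedObject:
    """Root of the simplified hierarchy: a name plus a unique resource ID."""
    name: str
    mrid: str = field(default_factory=lambda: str(uuid.uuid4()))


@dataclass
class Equipment(IdentifiedObject):
    in_service: bool = True


@dataclass
class PowerTransformer(Equipment):
    # Simplified assumption: the real standard models ratings differently.
    rated_mva: float = 0.0


@dataclass
class Substation(IdentifiedObject):
    # Association: a substation contains pieces of equipment.
    equipment: List[Equipment] = field(default_factory=list)

    def add(self, item: Equipment) -> None:
        self.equipment.append(item)


sub = Substation(name="Elm Street")
sub.add(PowerTransformer(name="T1", rated_mva=40.0))

# Any application can walk the same associations, whatever system supplied the data.
for eq in sub.equipment:
    print(type(eq).__name__, eq.name, eq.mrid)
```

The point of the sketch is the shared vocabulary: every object carries the same identifier and name fields, and relationships such as "substation contains equipment" are expressed once rather than separately in each application.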

Adoption of the CIM has increased but still faces challenges. An enterprise-wide view of the electric grid is complex due to the many heterogeneous data sources within a utility. Existing systems may have proprietary interfaces with significant lifespan and investments both in the technology and knowledge. Updating interfaces just to be standards compliant is a tough business case to justify.

A better strategy is to make interfaces standards-compliant when acquiring new systems or as part of a maintenance cycle. Another challenge may be that, when integrating proprietary systems into a CIM-based model, some information may not exist in the model and may need to be added to the standard. Unfortunately, virtually every CIM project requires the standard to be extended to be compatible with the unique needs of a given utility. Because the CIM is vendor neutral and designed to provide the flexibility needed for integration, this may result in semantic ambiguities among different software vendor products, especially if the utility does not already have a meta-data management practice or a defined semantic model.
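The extension problem can be pictured with a small Python sketch that translates one vendor's field names into a shared model and flags anything the model does not cover. The vendor field names, the model terms, and the `ext:` prefix are all hypothetical; real CIM extensions are managed through profiles and namespaces rather than a simple dictionary.

```python
# Hypothetical mapping from one vendor's export fields to shared model terms.
VENDOR_TO_MODEL = {
    "xfmr_nm": "name",
    "kva_rating": "rated_kva",
    "fdr": "feeder",
}


def to_model(record, mapping, extension_prefix="ext:"):
    """Translate a vendor record; keep unmapped fields under an extension prefix."""
    translated, extensions = {}, []
    for key, value in record.items():
        if key in mapping:
            translated[mapping[key]] = value
        else:
            # No equivalent in the shared model: a candidate for an extension.
            translated[extension_prefix + key] = value
            extensions.append(key)
    return translated, extensions


vendor_record = {"xfmr_nm": "T1", "kva_rating": 50, "fdr": "F12", "paint_code": "G7"}
model_record, needs_extension = to_model(vendor_record, VENDOR_TO_MODEL)

print(model_record)
print(needs_extension)
```

Keeping the mapping and the list of extensions explicit is one simple way to document where a utility's model departs from the standard, which is where semantic ambiguities between vendor products tend to arise.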

Approaches to Integration

Although many challenges exist related to ‘Little Data,’ significant progress is being made to enable innovation through Web standards like those led by the World Wide Web Consortium (W3C) [5] and technical interoperability such as an Enterprise Service Bus (ESB) or Service Oriented Architecture (SOA). Semantic standards like the CIM are addressed by organizations such as the CIM Users Group (CIMug) [6]. They are well-supported by vendors, such as ABB, IBM, Oracle, Siemens and more, and continue to drive towards continuous improvement. The EPRI Common Information Model (CIM) 2012 Update [7] and 2013 Update [8] provide insights on implementations by several utilities. The number of respondents from the 2013 CIM survey nearly doubled, indicating increased interest and adoption in the CIM worldwide.

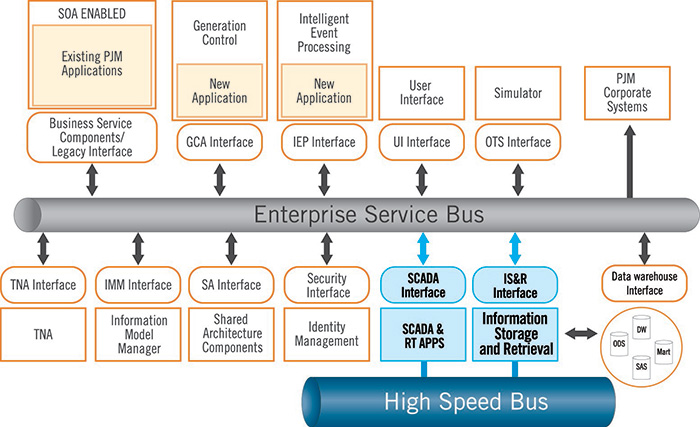

One approach to solving these challenges is an effort by PJM Interconnection [9] as part of its Advanced Control Center (AC2) program [10]. Commissioned on Nov. 8, 2011, AC2 features two fully functional control centers and data centers located at distant sites. Both sites are staffed 24/7 and simultaneously share responsibilities for operating the transmission system and PJM-administered wholesale electricity markets. Either site can run the RTO’s entire system independently should the other become inoperable.

Figure 2: PJM Enterprise Service Bus

This breakthrough proves that innovative technology, such as a service-oriented architecture (SOA) and a CIM-based messaging architecture, can be adapted to real-time, high-performance, mission-critical environments leading to the evolution of next generation control systems. From the beginning of designing the new control systems to increase operational efficiency and grid reliability, PJM sought an integrated architecture with embedded security controls, scalability and flexibility. Those goals led PJM to a service-oriented architecture to interoperate with a new Shared Architecture platform, which was co-developed with Siemens, so PJM’s systems could grow easily as new members integrated into the RTO and could adapt to new technologies and invite innovation.

This open, modern architecture, built on an enterprise service bus (ESB), as shown in Figure 2, enables the rapid integration of traditional utility applications and emerging Smart Grid applications. It provides flexibility and choices that previously had been unavailable due to legacy control center application investments. Utilizing a Shared Architecture enabled PJM to deploy new Energy Management and Market Management applications while leveraging legacy applications that will be replaced consistent with planned technology life cycles, thereby avoiding unnecessary reinvestment and risk.
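The decoupling that a service bus provides can be sketched in a few lines of Python. This is a toy, in-process publish/subscribe example under our own assumptions (the `ServiceBus` class, the topic name, and the message fields are hypothetical); it is not a representation of PJM's or Siemens' implementation, which must also address security, persistence, and real-time performance.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class ServiceBus:
    """Toy in-process bus: publishers and subscribers share only topic names."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> int:
        """Deliver the message to every handler on the topic; return the count."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


bus = ServiceBus()
received = []

# A legacy application and a newer one subscribe to the same topic independently.
bus.subscribe("meter.voltage", received.append)
bus.subscribe("meter.voltage", lambda m: received.append({**m, "checked": True}))

delivered = bus.publish("meter.voltage", {"meter_id": "M-100", "volts": 118.2})
print(delivered, received)
```

Because the publisher knows only the topic and the message format, an application can be added or retired without changing the others, which is the property that lets legacy and new applications coexist on the same bus.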

Summary

Adoption of mature technologies and improvement of semantic technologies are increasingly important as opportunities to improve operational efficiency and grid reliability are enabled by the combination of heterogeneous data. Because the CIM covers such a broad landscape of the electric utility enterprise, collaborative efforts such as learning from others and participating in standards development activities are essential in prioritizing the most important aspects of such development. The results of these efforts will enable the greatest flexibility in the choice of solutions that support innovation to manage the uncertainties of emerging value propositions from the availability of new and combined data.

About the Authors

Matt Wakefield is Director of Information, Communication, and Cyber Security at the Electric Power Research Institute. He has over 25 years of experience in the electric industry and his responsibilities include furthering the development of a modernized grid through application of standards, communication technology, integration, and cyber security.

Thomas F. O’Brien is the Vice President – Information & Technology Services at PJM Interconnection and is responsible for all aspects of PJM’s information technology services activities, including integration and application services and infrastructure operations. Additionally, he has provided active leadership for the implementation of the Advanced Control Center program including oversight of creation of a new information and application architecture for PJM’s Energy Management and Market Management Systems.

Mr. O’Brien is an energy professional with more than 25 years of broad experience in all aspects of the electric industry. Previously, Mr. O’Brien was employed by GPU Energy and FirstEnergy and participated in the deregulation activities and energy trading activities within the electric industry.

References

1 http://www.insightsquared.com/2012/01/7-facts-about-data-quality-infographic/

2 http://www.ibmbigdatahub.com/infographic/four-vs-big-data

3 http://www.epri.com/abstracts/Pages/ProductAbstract.aspx?ProductId=000000003002003997

4 http://my.epri.com/portal/server.pt?Abstract_id=TR-106324

5 http://www.w3.org/

6 http://cimug.ucaiug.org/default.aspx

7 http://www.epri.com/abstracts/Pages/ProductAbstract.aspx?ProductId=000000003002000406

8 http://www.epri.com/abstracts/Pages/ProductAbstract.aspx?ProductId=000000003002003034

9 http://www.pjm.com/about-pjm/who-we-are.aspx

10 http://w3.siemens.com/smartgrid/global/SiteCollectionDocuments/Projects/PJM_CaseStudy.pdf