The amount of data available to distribution system operators has grown by orders of magnitude with the rapid growth in the number of intelligent electronic devices (IEDs), sensors, and Advanced Metering Infrastructure (AMI) devices. Mechanisms are needed to create new value from this wealth of data without overburdening operators and engineers, especially during emergencies. Whether the data supports modeling, comes from external sources, or is cultivated from real-time operations, effective results depend on turning the data streams produced by new automation technologies into actionable information.

In information technology, ‘Big Data’ is a collection of data sets so large and complex that it becomes difficult to process using on-hand database management tools or traditional data processing applications.

Utilities are already dealing with Big Data. A simple example is a utility with 100,000 meters taking 15-minute reads. One year of history for a few data items per meter will be in the terabytes (TBs), not counting any other data.
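The arithmetic behind this estimate can be sketched in a few lines of Python. The number of data items per meter (4) and the storage cost per stored value (about 100 bytes once timestamps, meter identifiers, quality flags and index overhead are included) are illustrative assumptions, not figures from the article, and the constant names are hypothetical.

```python
# Rough sizing of one year of 15-minute interval data for 100,000 meters.
# ASSUMPTIONS (illustrative only): 4 data items per meter and ~100 bytes
# stored per value once timestamps, IDs, flags and indexes are included.
METERS = 100_000
READS_PER_DAY = 24 * 60 // 15        # 96 interval reads per day
DAYS_PER_YEAR = 365
ITEMS_PER_METER = 4                  # hypothetical, e.g. kWh, kVARh, voltage, demand
BYTES_PER_READING = 100              # hypothetical storage cost per stored value

def yearly_readings(meters=METERS, items=ITEMS_PER_METER):
    """Number of individual values collected in one year."""
    return meters * READS_PER_DAY * DAYS_PER_YEAR * items

def yearly_terabytes(bytes_per_reading=BYTES_PER_READING):
    """Approximate storage in decimal terabytes (10**12 bytes)."""
    return yearly_readings() * bytes_per_reading / 10**12

print(f"{yearly_readings():,} readings per year")   # 14,016,000,000 readings per year
print(f"~{yearly_terabytes():.1f} TB per year")     # ~1.4 TB per year
```

The total scales linearly with the per-value storage assumption, so the result should be read as an order of magnitude rather than a precise figure.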

1 Gartner provides the following definition: ‘Big Data are high-volume, high-velocity, and/or high-variety information assets that require new forms of processing to enable enhanced decision making, insight discovery and process optimization.’

The Data Analytics Problem

Analyzing new data sources, such as those from AMI meters, substation IEDs, and distribution sensors, can generate information that helps electric distribution utilities improve operational efficiency, reliability, performance, and asset utilization. However, the potentially enormous quantities of data can easily exceed a utility's capability to transmit, process, and use the information effectively (sometimes called the ‘data tsunami’). Similar data tsunamis have emerged in other industries, and new technologies have been developed to address ‘Big Data.’ Suitable mechanisms are needed to:

- Identify the highest-value data items from available data sources (filtering)

- Convert data to new forms of information (data analysis)

- Communicate insight to recommend action or to guide decision making, often using data visualization (visualization)

What is Analytics?

Analytics is the discovery and communication of meaningful patterns in data. Analytics can also be thought of as tools that identify unforeseen benefits and solutions in vast amounts of data. Analytics typically:

- Employ multiple and divergent data sources beyond the sampled raw data, including weather, economic, operational conditions, census data and social media

- Use techniques drawn from statistics, programming, historical experience, geospatial analysis and operations research

- Present results, often in a visual and/or graphical manner

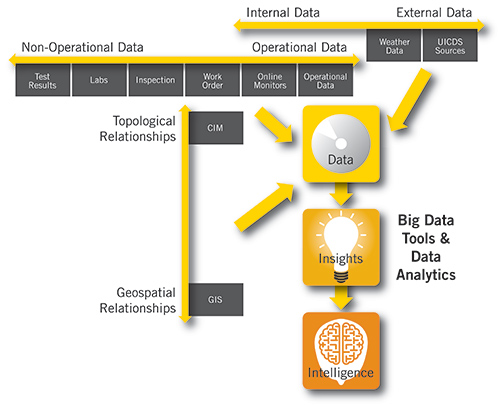

Figure 1 illustrates how utilities can use big data tools and analytics to discover new insights from complex data sources. As shown, multiple internal utility data sets (operational and non-operational) and external data from outside agencies can be combined with other large data sources that describe complex relationships, such as Common Information Model (CIM) electrical connectivity data and Geographic Information System (GIS) spatial data, to create new insights and intelligence.

Figure 1: Creating Intelligence from a Myriad of Data Sources using Big Data Tools and Analytics

Applying big data tools and analytics provides the means for utilities to create new value from their smart grid and AMI platforms. Specific examples of grid analytics include:

a) Data analytics using AMI/MDM solutions

b) Outage management operational metrics

c) Emergency response data sharing

d) Device level asset intelligence

e) Optimizing distribution system performance through DMS applications

Big data and analytics are a continuum rather than a black-and-white concept. In other words, they are a road to travel rather than something a utility is either doing or not doing. In laying out a roadmap to big data and analytics, we recommend starting with basic analytics, such as AMI/MDM and outage management operational metrics, before moving to big data analytics such as device level asset intelligence or cleaning up the engineering data used for fault location predictions.

It Starts With AMI Data

The AMI meter is not just a meter; it is a grid sensor providing energy, power and power quality data at the frequency and latency needed to meet business needs. The Meter Data Management (MDM) system is responsible for ensuring the data’s accuracy and completeness. Data analytics can deliver value only if the source data is correct and available.

The design and implementation of AMI/MDM requires that the solution be able to collect and process not just the data required for billing, but data from all meter points all the time. Equally important, but often overlooked, the solution must be able to distribute the data to the systems that can use it, with similarly high performance and reliability. Finally, the solution must be treated as a real-time collection of sensors for utility operations. Unfortunately, many AMI solutions are initially designed, implemented and operated without this forethought, focusing narrowly on collecting billing reads on a monthly billing cycle. So the first step in data analytics is to ensure that the data generator can deliver the accurate, complete and timely information needed.

The AMI/MDM solution is not just a data generator. Utilities are already doing data analytics using the tools that are part of the AMI/MDM solution to solve simple problems and provide meaningful results. Several examples of these analytic solutions are:

- Unauthorized Usage. Several utilities are analyzing consumption at meters with no customer contract to identify premises where a consumer has moved in but has not notified the utility to initiate service.

- Theft Detection. One utility compared daily premise usage on a high-usage day to identify premises using less energy than similar premises, creating a list of potential energy diversion cases.

- Service Problem Detection. Some utilities are flagging services with an excessive number of outages, or blinks, to find loose connectors or vegetation issues.

- High Usage. Other utilities are comparing individual customers’ consumption against their historical consumption as a customer service, identifying potential service problems (e.g., a water leak) or customers who may receive an unexpectedly high bill.
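The four checks above reduce to simple filters and comparisons over MDM data. The sketch below is a minimal illustration, assuming the data has already been extracted into plain Python dictionaries keyed by meter, premise, or customer; the function names, input shapes and thresholds (`min_kwh`, `ratio`, `max_blinks`, `factor`) are hypothetical and would need tuning against a utility's own data. The theft check compares each premise with the median of its peer group.

```python
from statistics import mean, median

# All thresholds below are hypothetical; real values would be tuned per utility.

def unauthorized_usage(daily_kwh, active_contracts, min_kwh=1.0):
    """Meters recording meaningful consumption with no active customer contract."""
    return sorted(m for m, kwh in daily_kwh.items()
                  if m not in active_contracts and kwh >= min_kwh)

def theft_candidates(peak_day_kwh, peer_group, ratio=0.5):
    """Premises using less than `ratio` times their peer-group median on a high-usage day."""
    groups = {}
    for premise, group in peer_group.items():
        groups.setdefault(group, []).append(peak_day_kwh[premise])
    group_median = {g: median(values) for g, values in groups.items()}
    return sorted(p for p, g in peer_group.items()
                  if peak_day_kwh[p] < ratio * group_median[g])

def service_problems(blink_counts, max_blinks=10):
    """Services whose outage/blink count over the review period exceeds `max_blinks`."""
    return sorted(s for s, n in blink_counts.items() if n > max_blinks)

def high_usage(current_kwh, history_kwh, factor=1.5):
    """Customers whose current usage exceeds `factor` times their historical average."""
    return sorted(c for c, kwh in current_kwh.items()
                  if history_kwh.get(c) and kwh > factor * mean(history_kwh[c]))

# Example with made-up values:
print(unauthorized_usage({"M1": 12.0, "M2": 0.0, "M3": 8.5}, {"M1"}))  # ['M3']
print(theft_candidates({"P1": 40, "P2": 42, "P3": 38, "P4": 10},
                       {"P1": "res", "P2": "res", "P3": "res", "P4": "res"}))  # ['P4']
```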

Although ‘big data analytics’ may appear to be a new concept, many utilities are already doing analytics using their AMI data and simple tools to extract value from their investment.

Outage Management Analytics are a Great Stepping Stone to Bigger Things

A good Outage Management System (OMS) is capable of consuming real-time information from a variety of sources, analyzing it, prioritizing the work and providing its operators with a mechanism to respond to outages and perform efficient restoration. The OMS maintains a real-time, as-operated electrical model of the distribution network, tracking device operations in the field as well as the application of safety tags and temporary devices such as cuts and jumpers. As a result, outage systems are a rich source of data for both real-time and historical distribution system analytics.

For real-time processes, the analytics need to provide comprehensive situational awareness that enables optimal management decisions based on operational priorities. The basic priorities are to: 1) address situations affecting public and employee safety first; 2) next, resolve issues that affect emergency management, medical, and public-good needs; 3) then restore the largest number of customers as soon as possible, optimizing restoration by taking system topology and geography into account; and 4) maintain accurate records throughout the process, for immediate use and after restoration. A number of real-time analytics can support these goals, such as:

- Outage Type Counts By Region vs. Crew Skill Set and Regional Assignments. Comparison of the volume of work by region against the real time distribution of crews enables management to make informed decisions for more efficient distribution of crews to support restoration activities in different operating regions.

- Estimated Restoration Time Metrics. Trending current estimated restoration times against actual restoration times and current outage durations allows problems with aging outages to be identified quickly, before estimated restoration times are missed. This can improve customer satisfaction by reducing the chance of giving a customer inaccurate information.

- Hourly Restorations/New Outages. Analyzing outage trends by region and outage clearing device type shows whether the ratio of restorations to new outages is increasing or decreasing. This supports informed decisions on calling in additional crews.

- Data Entry and Validation Checks. Often in the busy process of restoration, key pieces of information can be incorrectly entered. Analytics that generate a set of metrics for measuring performance can be used as a supplemental data validation method which helps ensure that data anomalies are caught and corrected early.
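Two of these metrics, the hourly restoration-to-new-outage ratio and a check for outages whose estimated restoration time (ERT) is about to be missed, can be sketched as follows. The event format `(timestamp, region, kind)`, the 30-minute margin and the function names are assumptions for illustration, not an OMS interface.

```python
from collections import Counter
from datetime import datetime, timedelta

def hourly_ratio(events, region, hour):
    """Restorations divided by new outages for one region in one clock hour.

    `events` holds hypothetical (timestamp, region, kind) tuples, kind being
    'new' or 'restored'. Returns None when no new outages occurred that hour.
    """
    counts = Counter(kind for ts, reg, kind in events
                     if reg == region
                     and ts.replace(minute=0, second=0, microsecond=0) == hour)
    return counts["restored"] / counts["new"] if counts["new"] else None

def outages_at_risk(open_outages, now, margin=timedelta(minutes=30)):
    """Open outages whose ERT has already passed or will pass within `margin`."""
    return sorted(oid for oid, ert in open_outages.items() if ert - now <= margin)

events = [
    (datetime(2024, 5, 1, 14, 5), "North", "new"),
    (datetime(2024, 5, 1, 14, 20), "North", "new"),
    (datetime(2024, 5, 1, 14, 40), "North", "restored"),
    (datetime(2024, 5, 1, 15, 10), "North", "restored"),
    (datetime(2024, 5, 1, 15, 30), "North", "restored"),
    (datetime(2024, 5, 1, 15, 45), "North", "new"),
]
for h in (14, 15):
    print(h, hourly_ratio(events, "North", datetime(2024, 5, 1, h)))
# 14 0.5
# 15 2.0
```

A ratio rising from 0.5 to 2.0, as in this made-up data, would suggest crews are gaining on new outages in that region.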

Historical analytics from an OMS, when combined with large amounts of data from other systems such as an MDM, can be used to improve the accuracy of system restoration information. This analysis supports customer satisfaction efforts by identifying customers who may be approaching a threshold for the number of outages experienced. It can also be used to replay utility responses to events, to analyze areas for improvement, and to proactively identify weaknesses in the system.
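Identifying customers who are approaching an outage-count threshold amounts to counting each customer's outages over a trailing window. In this sketch the 365-day window, the threshold of 6 and the warning level of 4 are hypothetical values, and the outage log is assumed to be a list of `(customer_id, outage_start)` pairs already joined from OMS and MDM data.

```python
from collections import Counter
from datetime import datetime, timedelta

def customers_near_threshold(outage_log, now, threshold=6, warn_at=4, window_days=365):
    """Customers with warn_at <= outage count < threshold in the trailing window."""
    window = timedelta(days=window_days)
    counts = Counter(cust for cust, start in outage_log
                     if start <= now and now - start <= window)
    return sorted((c, n) for c, n in counts.items() if warn_at <= n < threshold)

log = ([("C1", datetime(2024, m, 1)) for m in range(2, 7)]      # 5 recent outages
       + [("C2", datetime(2024, 3, 1)), ("C2", datetime(2024, 4, 1))]
       + [("C3", datetime(2024, m, 1)) for m in range(2, 8)]    # 6: already at threshold
       + [("C4", datetime(2022, m, 1)) for m in range(1, 5)])   # outside the window
print(customers_near_threshold(log, now=datetime(2024, 12, 31)))  # [('C1', 5)]
```

Customers at or above the threshold are deliberately excluded here because they have already crossed it; they would be tracked in a separate report.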

Outage management systems also provide valuable data that can be analyzed to improve asset utilization and drive preventive maintenance programs. When OMS data is integrated with geospatial asset, maintenance management, AMI, and SCADA systems, the results of the data analytics become more valuable, creating new opportunities to save money and track the performance of system assets.

For example, device outage data can be combined with asset/location data to look for patterns of device failure that lead to changes in maintenance priorities. Other possibilities include:

- Relating momentary outages to asset and network issues

- Auditing construction and maintenance activities that lead to outages

- Tracing outage history to vendor quality control deficiencies

- Understanding the impacts of outage causes on revenue, regulatory compliance, and customer satisfaction
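As a sketch of the vendor quality control idea, the snippet below joins device-caused outage records to an asset registry and normalizes failures by the number of installed units per vendor. The input shapes and vendor names are hypothetical; a real study would also control for device age, loading and environment before drawing conclusions about a vendor.

```python
from collections import Counter

def failure_rate_by_vendor(assets, outage_devices):
    """Device-caused outages per installed unit, grouped by vendor.

    assets:         {device_id: vendor}, e.g. from an asset registry
    outage_devices: one device_id per outage attributed to a device failure
    """
    installed = Counter(assets.values())
    failures = Counter(assets[d] for d in outage_devices if d in assets)
    # Outage records whose device_id is not in the registry are skipped here;
    # in practice they are themselves a data-quality finding worth reporting.
    return {vendor: failures[vendor] / n for vendor, n in installed.items()}

assets = {"F1": "VendorA", "F2": "VendorA",
          "F3": "VendorB", "F4": "VendorB", "F5": "VendorB", "F6": "VendorB"}
print(failure_rate_by_vendor(assets, ["F1", "F1", "F2", "F3", "X9"]))
# {'VendorA': 1.5, 'VendorB': 0.25}
```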

Emergency Response Data Sharing

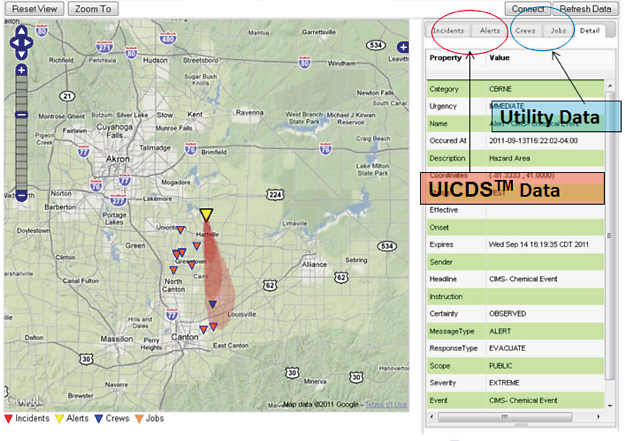

For emergency response, advances in interoperability, such as the Unified Incident Command and Decision Support (UICDS) system from the U.S. Department of Homeland Security, enable new capabilities for sharing public and private data. This interoperability and the new data sources enabled by UICDS improve situational awareness and enrich the data analysis for both utilities and public authorities.

For utility operations, the additional data provided to the OMS through UICDS from sources outside the utility (police, fire, emergency response organizations, and other utility operational systems) improves situational assessment and restoration. Transportation infrastructure status, potential or actual hazard locations, and the locations of available medical, fire and other resources all assist utility field operations. For emergency response authorities, utility data helps put responders where they are most needed, keeps first responders aware of utility field operations and of safety risks such as downed power lines, and focuses utility restoration where it is most needed for public safety and disaster recovery.

Data sharing is further supported with the integration of the utility/public authority command structures. UICDS provides services to coordinate and share Incident Command System (ICS) data and operations, enabling the utility and public authorities to operate under a unified command.

Coordination of the utility outage detection and restoration processes with public authority incident management improves decision making, leading to better response speed, effectiveness, efficiency, and safety in emergency response.

Figure 2 illustrates the application of shared data to enable an integrated, multi-source approach to emergency management. This approach becomes increasingly powerful as more agencies and responders contribute to overall situational awareness.

Figure 2: Sharing information between Utility and Emergency Response Organizations

Developing Asset ‘Intelligence’

Big data technologies are well suited to implementing device level asset intelligence. Asset management practitioners seek answers to the following questions:

- What assets do I have and where are they?

- Are these assets performing according to expected performance (utilization, availability, life time) criteria?

- What is the present condition of the assets?

- What are the expected risks of non-compliance with respect to established standards?

- What optimal actions need to be taken to mitigate the identified risks?

Question 1 establishes the framework for the asset management space in terms of the physical nature and location of the assets. Questions 2 to 4 identify the right work to do, and question 5 deals with doing the work right.

It is important to recognize that raw data is spread across various databases in the enterprise, and that a common link (for example, a shared asset identifier) enables the development of asset intelligence. A power transformer, for example, has related data in multiple databases: a Geographic Information System (GIS) database that tracks its location and positional attributes, an operations database that tracks electrical and non-electrical parameters and topology, a maintenance database that tracks maintenance information, an outage database that indirectly tracks performance, and a financials database that tracks associated costs.
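A minimal sketch of that common link, assuming each system can export its records keyed by the same asset identifier. The system names (`gis`, `maintenance`, `financials`), field names and identifier format are hypothetical. Recording which sources have no entry for an asset is a cheap first data-quality check.

```python
def build_asset_view(asset_id, **sources):
    """Merge per-system records that share a common asset identifier.

    Each keyword argument is one source system, given as a mapping of
    asset_id -> {attribute: value}. Attributes are prefixed with the system name.
    """
    view = {"asset_id": asset_id}
    missing = []
    for system, table in sources.items():
        record = table.get(asset_id)
        if record is None:
            missing.append(system)  # no entry for this asset in that system
            continue
        for field, value in record.items():
            view[f"{system}.{field}"] = value
    view["missing_sources"] = missing
    return view

gis = {"T-1001": {"lat": 40.1, "lon": -75.2}}
maintenance = {"T-1001": {"last_inspection": "2023-06-01"}}
financials = {}
print(build_asset_view("T-1001", gis=gis, maintenance=maintenance, financials=financials))
```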

Distribution transformer asset management is a good example of how big data and data warehouse concepts may be effectively combined to develop asset intelligence. Consider the following three metrics in the context of transformer sizing:

- Number of times the transformer has been overloaded during its service life.

- The ratio of total time the transformer was subject to overload to the total time in service.

- The ratio of cumulative amount of loading greater than the transformer rating to the cumulative amount of total loading for the transformer.

The metrics provide a good understanding of the utilization of the transformer over the period of analysis. In this case, the total power flow of the transformer is calculated as the aggregation of all the customers that are fed off this transformer. The actual value of the individual customer loads comes from a big data source – the MDM. The association of the individual customers to the transformer comes from the topological model, typically shared between systems using the CIM. The decision to upgrade the transformer may be based on statistical characteristics of these metrics, calculated for all transformers in the service area.
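The calculation described above can be sketched in two steps: aggregate interval demand from the MDM up to each transformer using the customer-to-transformer associations from the topology model, then compute the three metrics. Assumptions: all load series are time-aligned, equal-length interval demand values in the same unit as the rating (e.g., kVA); an overload "event" is a run of consecutive overloaded intervals; and the third metric is read as loading above the rating divided by total loading, one reasonable interpretation of the definition. Function names are hypothetical.

```python
def transformer_load(customer_loads, customer_to_xfmr):
    """Sum per-interval customer demand (MDM) into per-transformer series (topology model)."""
    totals = {}
    for cust, series in customer_loads.items():
        xfmr = customer_to_xfmr.get(cust)
        if xfmr is None:
            continue  # customer missing from the model: a connectivity-data issue
        acc = totals.setdefault(xfmr, [0.0] * len(series))
        for i, value in enumerate(series):
            acc[i] += value
    return totals

def overload_metrics(load, rating):
    """Return (overload events, overload time ratio, excess-loading ratio)."""
    over = [v > rating for v in load]
    # count the start of each run of consecutive overloaded intervals
    events = sum(1 for i, o in enumerate(over) if o and (i == 0 or not over[i - 1]))
    time_ratio = sum(over) / len(load)
    total = sum(load)
    excess = sum(max(0.0, v - rating) for v in load)
    return events, time_ratio, (excess / total if total else 0.0)

loads = {"C1": [10, 30, 30, 10, 30], "C2": [10, 20, 25, 5, 20], "C3": [5, 5, 5, 5, 5]}
mapping = {"C1": "T1", "C2": "T1", "C3": "T2"}
xfmr = transformer_load(loads, mapping)
print(xfmr["T1"])                            # [20.0, 50.0, 55.0, 15.0, 50.0]
print(overload_metrics(xfmr["T1"], rating=45))
```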

Other metrics similar to those defined above may be used to quantify aspects of asset health, asset reliability and asset risk and to study the impact of various “what-if” actions as optimal strategies for asset management are devised.

Answers to the utilization question above may be obtained by identifying the number of times the transformer is overloaded (power flow exceeds its nameplate, or rated, value) and the total duration of overload over its service life. For both of these metrics, a higher value means that the asset is overutilized and is a candidate for proactive action to remedy the situation.
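Because the upgrade decision may rest on statistical characteristics of these metrics across the whole service area, one simple approach is to flag transformers whose metric exceeds a fleet-wide percentile. The 90th-percentile cut-off and the sample values are illustrative assumptions; a utility might equally combine several metrics or use a risk-weighted score.

```python
from statistics import quantiles

def upgrade_candidates(metric_by_xfmr, pct=90):
    """Transformers whose metric exceeds the fleet-wide `pct`-th percentile (1-99).

    Returns (candidate_ids, cutoff). Uses linear interpolation between
    ranked values ('inclusive' method).
    """
    cutoffs = quantiles(metric_by_xfmr.values(), n=100, method="inclusive")
    cutoff = cutoffs[pct - 1]
    return sorted(t for t, v in metric_by_xfmr.items() if v > cutoff), cutoff

# Hypothetical overload time ratios for a small fleet
time_ratio = {"T1": 0.01, "T2": 0.02, "T3": 0.0, "T4": 0.05, "T5": 0.03,
              "T6": 0.40, "T7": 0.02, "T8": 0.01, "T9": 0.04, "T10": 0.06}
candidates, cutoff = upgrade_candidates(time_ratio)
print(candidates, round(cutoff, 3))  # ['T6'] 0.094
```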

Optimizing Performance: Distribution System Management and Automation

Grid modernization is transforming distribution system operations from a mostly manual, paper-driven activity into an electronic, computer-assisted decision-making process. New sensors, meters, and other IEDs provide increasing levels of automation and can supply a wealth of new information to assist distribution operators, engineers, and managers. This enables them to make informed decisions about improving system efficiency, reliability, and asset utilization, without compromising safety and asset protection.

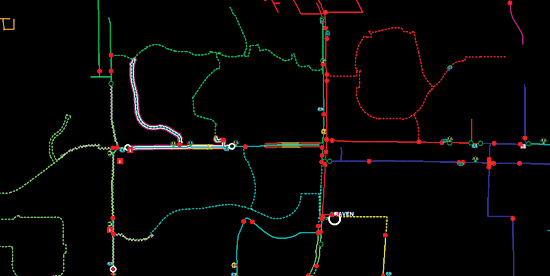

One key operating requirement that can benefit from improved data analytics is Fault Location. When a customer outage occurs, distribution operators must dispatch crews to the affected area to locate the root cause of the outage and take the necessary corrective actions. Existing fault location methods, such as grouping customer ‘lights out’ calls and using ‘distance to fault’ information from protective relays, can provide approximate fault location predictions. This narrows the search, but can still leave a significant portion of the feeder to investigate, especially on long feeders with numerous branches. By combining these existing data sources with voltage measurements from distributed sensors and AMI meters, inputs from available faulted circuit indicators, and an ‘as-operated’ model of the distribution circuit, fault location predictions can be significantly improved. The result is a much more accurate fault location prediction that dispatchers can act on. The benefit is a more productive field workforce and faster service restoration for the affected customers. Figure 3 shows the output of a Fault Location (FL) application after most of the feeder has been re-energized (all except the faulted sections).

Figure 3: Output of a FL application, where fault locations appear as “halos.”
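One ingredient of such an application, tracing upward from the meters that report loss of voltage to the lowest device they all share, can be sketched on a radial feeder represented as a child-to-parent map. This is a classic, simplified outage-prediction heuristic, not the FL application shown in Figure 3; device names are hypothetical, and a fuller approach would also weigh relay distance-to-fault, faulted circuit indicator status, and sensor voltages.

```python
def path_to_source(node, parent):
    """Nodes from `node` up to the feeder source in a radial network."""
    path = [node]
    while node in parent:
        node = parent[node]
        path.append(node)
    return path

def common_upstream_device(outaged_meters, parent):
    """Deepest device that lies upstream of every meter reporting loss of voltage."""
    paths = [path_to_source(m, parent) for m in outaged_meters]
    shared = set(paths[0]).intersection(*paths[1:])
    # walking the first path from the meter upward, the first shared node is the deepest
    return next(n for n in paths[0] if n in shared)

parent = {"B1": "SUB", "B2": "B1", "B3": "B1",
          "T1": "B2", "T2": "B2", "T3": "B3",
          "M1": "T1", "M2": "T1", "M3": "T2", "M4": "T3"}
print(common_upstream_device(["M1", "M3"], parent))  # B2
print(common_upstream_device(["M1", "M4"], parent))  # B1
```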

Decision making at a growing number of electric distribution utilities is supported by a Distribution Management System (DMS) that includes advanced software applications for optimizing distribution system performance. Several key applications, such as Volt-VAR optimization, rely heavily on an accurate model of the ‘as-operated’ state of the distribution network. Until recently, such models were often of questionable accuracy because of the lack of reliable measurements and errors in physical data extracted from the GIS. As a result, some utilities have been reluctant to place such applications in service, missing an opportunity to optimize distribution system performance. Once again, data analytics may provide a solution. Analytics that combine AMI load measurements with statistical load survey data can greatly improve the load models used by the DMS. Researchers at the Electric Power Research Institute (EPRI) have recently shown that transformer phasing errors, a common modeling problem, and other modeling errors can be reliably detected by comparing AMI and substation voltage measurements. These analytics can greatly improve operator knowledge of the electrical state of the distribution system, enabling operators, engineers, and managers to take action to improve system performance without adding risk.

Many other examples for distribution feeder analytics exist, including detecting incipient faults and minimizing voltage regulator operations.

Summary

Analytics and big data tools give utilities a new means to discover new and useful patterns in multiple, large and diverse data sources. The recommended roadmap for getting to big data analytics starts with analytics that are not big data in the purest sense, such as AMI/MDM and outage management operational metrics. Once these more basic analytics are well in hand, the utility is ready to move to big data analytics such as device level asset intelligence or improving the underlying data used for fault location predictions. The roadmap is supported by integration within the enterprise and with organizations outside the utility industry, and by improved standards such as CIM.

About the Authors

CJ Parisi is a Senior Consultant at UISOL. He has over 17 years’ experience implementing Outage Management Systems and developing Distribution System Business Intelligence. CJ is currently providing technical expertise for outage management implementations and data warehouse and analytics solutions for the distribution control center. Before joining UISOL, CJ worked for CES International (currently Oracle Utilities), Obvient Strategies (currently ABB/Ventyx) and Siemens Smart Grid Division. CJ can be reached at cparisi@uisol.com.

Dr. Siri Varadan is VP of Asset Management at UISOL. He specializes in delivering asset management solutions to electric power utilities, and has experience across the entire T&D wires business. He focuses on providing industry solutions involving big data and data analytics in the context of asset performance, health and risk management. Dr. Varadan is a senior IEEE member, a licensed professional engineer, and a member of the Institute of Asset Management. He speaks at IEEE-PES sponsored meetings regularly and may be reached at svaradan@uisol.com.

Mark Wald is a Director at UISOL. He has over 25 years of experience in electric utility operations, including EMS network applications, energy markets, distribution applications, and IEC CIM standards. Mark is a member of IEC TC57 WG16, and is supporting the extensions of the CIM energy market model for demand response. He applies business intelligence to electric power operations, and is leading application of new standards for integration of utility and emergency operations. Before joining UISOL, Mark was at ObjectFX, Oracle, and Siemens (Empros). Contact Mark at mwald@uisol.com.

1 Laney, Douglas. “The Importance of ‘Big Data’: A Definition.” Gartner. http://www.gartner.com/resid=2057415