While still in its early stages of deployment, the “smart grid” appears to have gone mainstream.

Popular technology media have been talking for several years now about the proposed benefits and potential drawbacks of a national power grid that can “learn” and adapt to power-consumption behaviors, and “smart grid” has become one of the most talked-about topics in the high-tech space. In a sense, the concept has arrived, even though the technology itself is still evolving.

One of the negative side effects of this growing conversation, however, is that “smart grid” has become a fairly general term – much like the word “communications.” In other words, it means a lot of different things to a lot of different people. For many people in the United States, smart grid is equated with Advanced Metering Infrastructure (AMI). While a smart meter can be part of the smart grid, it’s important to realize that it’s the endpoint – the last part of the grid. A lot can happen between the actual generating point and that endpoint. Smart endpoint does not equal smart grid.

So where do the actual “smarts” behind the grid come from?

To be considered smart, something (or someone) needs the ability to find, process and act on information. While the smart meter plays a critical role in the grid by helping utilities and consumers analyze their energy usage, there are plenty of other players in this equation who need certain bits of information to ensure the grid stays reliable in the first place. In the world of transmission and distribution, plant substation managers also need smarter ways to glean and analyze relevant information they can use to keep their assets running smoothly. This is one area that has advanced far more slowly than AMI technologies such as metering.

Many utilities, for instance, still struggle to prove that the information they do gather from assets such as transformers is reliable. Only recently have some (but not all) companies solved this problem, so that they no longer have to question whether the information they receive from alarm monitors is correct and actionable. Still, there is ample room for growth in monitoring, in how monitoring can be implemented and in the information that can be derived from it.

While the smart grid may mean different things to different people, this much is true: all the players involved need to get smarter about accomplishing the same thing. In this case, that means digging deeper for data and decoding what that data means to stabilize the power grid infrastructure.

And that’s going to take a lot more than a meter.

A Closer Look at DGA Monitoring

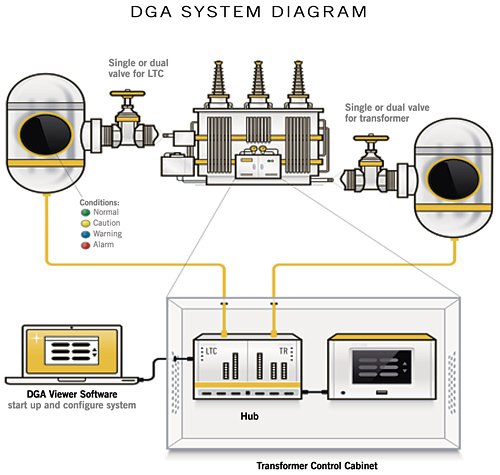

SmartDGA – Newer, more flexible DGA systems are giving facilities the ability to combat a variety of issues and improve condition-based monitoring programs for utilities.

Most professionals who are tasked with managing transmission and distribution infrastructure already subscribe to the notion that grid infrastructure modernization is the most important step to achieving a smarter grid. The reason is simple: many critical assets in the national power grid (e.g., underground cables and transformers) are nearing the end of their originally intended useful lives, and will only be pushed harder as demand continues to grow. This is a perfect opportunity to apply the smart grid concept to extend asset life.

To be fair, this isn’t a problem that can be solved overnight, but there are specific monitoring fixes that can and should be made to improve condition-based monitoring programs for utilities. One of these specific areas is dissolved gas analysis (DGA), which remains one of the most important tools utilities have in monitoring transformer performance. The oil samples undoubtedly provide important information, and most companies have some sort of DGA program to track transformer health.

A recent targeted survey of approximately 50 electric utilities and transformer manufacturers conducted by LumaSense Technologies, for instance, showed that roughly two-thirds of the respondents (66 percent) considered online DGA to be “very important” in condition monitoring programs, with transformer DGA being the most important item monitored. The same survey, though, also confirms that oil sampling is happening less and less frequently, with most respondents saying they sampled oil for lab analysis either once every six months or once a year. That’s a far cry from the way things used to be when utilities had more resources and could sample much more often. And considering the increased demand on today’s transformers coupled with their age, less frequent monitoring is the wrong approach because the probability of an incident occurring between samples is rising.

Another drawback is the higher cost that results from the manually intensive process. All of these hurdles (in addition to monetary issues) contribute to the infrequent sampling rates that threaten to hamper infrastructure reliability. The seemingly obvious answer is automated technology, or online DGA monitoring. Statistics show, however, that fewer than 5 percent of transformers have a form of online DGA monitoring.

While this red flag may scream for the need to implement more online solutions, several issues remain that must be addressed before the industry sees an increased adoption rate.

Installation, Operations and Maintenance

In layman’s terms, the harder the installation, the higher the reluctance. Installation times for online DGA systems range from half a day to five days. The time required depends on whether separate stands for the instruments, additional oil lines to and from the transformer or load-tap changer (LTC), complex wiring and additional supports are needed. Another challenge is outage scheduling because many utilities do not allow installations to happen while transformers are in service.

Then there’s the issue of where the installations take place. Transformers, by necessity, are placed in some of the harshest environments, which means DGA instrumentation must endure those conditions too. But it doesn’t always succeed – instrumentation has been known to freeze in cold weather, dry out in hot weather and short out in wet weather. This also contributes to higher costs because packaging designed to guard against these conditions is not inexpensive.

Additionally, due to these locations, transformers and LTCs are typically not visited frequently, which means it may be months between checks on the equipment. Oil leaks on monitoring systems can result in devastating circumstances for a transformer or LTC. The connections between a DGA instrument and the transformer or LTC mean longer plumbing runs to and from the instrument. Translation: there is a greater probability of oil leakage.

So what’s the answer here? Generally speaking, DGA monitors have traditionally been too expensive, invasive, oversized and hard to install, and have required close monitoring. All of these factors drive costs up. The utility industry isn’t the first, however, to encounter such problems with technology. In the medical field, for instance, pacemakers once faced the same criticisms. Modular systems are a potential solution to this problem because they are generally less expensive, easier to install, less invasive, and can be more reliable. This type of solution can help achieve widespread use and enhanced grid reliability, and it continues to improve from year to year.

Data Acquisition and Analysis

The biggest problem facing the industry right now is the inability to take data and convert it into useful information. If companies don’t have a way to turn that data into actionable insights, it’s as good as useless. Older DGA instrumentation merely provided results and numbers, with no guidance except for an alarm when a certain set point was exceeded (set points, by the way, that were determined by the utility). Given the amount of manual analysis required to extract useful information from the data, the next wave of instrumentation can and should help operators interpret it. Realistic expectations include guidance for understanding readings and comparisons to standards.
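As a rough illustration of the difference between a bare alarm and a reading that comes with guidance, the sketch below compares gas readings against utility-defined set points and reports how far each one is over its limit. It is a minimal sketch only: the gas list, the set-point values and the names `SET_POINTS_PPM`, `Finding` and `evaluate` are hypothetical and are not taken from any standard or vendor instrument.

```python
from dataclasses import dataclass

# Hypothetical utility-defined set points in ppm (illustrative values only,
# NOT taken from any standard or from the article).
SET_POINTS_PPM = {"H2": 100.0, "C2H2": 2.0, "CO": 400.0}


@dataclass
class Finding:
    gas: str
    reading_ppm: float
    set_point_ppm: float
    ratio: float  # reading divided by set point; values above 1.0 exceed the limit


def evaluate(readings_ppm, set_points_ppm=SET_POINTS_PPM):
    """Return one Finding per gas whose reading exceeds its set point."""
    findings = []
    for gas, limit in set_points_ppm.items():
        reading = readings_ppm.get(gas)
        if reading is not None and reading > limit:
            findings.append(Finding(gas, reading, limit, reading / limit))
    # Largest exceedance first, so the most urgent gas is listed at the top.
    return sorted(findings, key=lambda f: f.ratio, reverse=True)


# Usage with made-up readings:
sample = {"H2": 150.0, "C2H2": 1.0, "CO": 1200.0}
for f in evaluate(sample):
    print(f"{f.gas}: {f.reading_ppm} ppm is {f.ratio:.1f}x the set point of {f.set_point_ppm} ppm")
```

Ranking by ratio rather than by raw ppm is a design choice made here because the set points differ widely between gases; a real instrument might rank by rate of change or by a standard's condition levels instead.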

But this also brings us back to one of the original points – how can an operator know he or she is viewing data that can be trusted? As in most conservative industries, companies tend to rely on the tried-and-true systems that have been in use for decades. New technologies are at a clear disadvantage because they are unknown, and substation managers aren’t willing to take the risk – not with hundreds of thousands of potentially unhappy customers waiting in the wings. An alternative approach to evaluating new technologies, however, is to explore relevant sensing technologies that are well known and established in other industries. For example, non-dispersive infrared (NDIR) technology has been proven for decades in industries such as automotive and medical devices that have to carefully track emissions. The same technologies can be applied in load tap changers, and can be even more appealing if industry-accepted analytics (Duval’s Triangle, Rogers Ratio, Key Gases, etc.) are incorporated directly into the instruments to boost data reliability.
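To give a sense of what building such analytics into an instrument involves, the sketch below computes the coordinates used by the classic Duval Triangle: the relative percentages of methane (CH4), ethylene (C2H4) and acetylene (C2H2). It deliberately stops short of assigning a fault zone, because the zone boundaries should be taken from the published method rather than from an illustration. The function name `duval_triangle_coordinates` and the sample readings are hypothetical.

```python
def duval_triangle_coordinates(ch4_ppm, c2h4_ppm, c2h2_ppm):
    """Return the relative percentages of CH4, C2H4 and C2H2.

    The three values sum to 100 and locate one point inside the triangle.
    Fault-zone classification is intentionally left out of this sketch.
    """
    gases = {"CH4": ch4_ppm, "C2H4": c2h4_ppm, "C2H2": c2h2_ppm}
    if any(value < 0 for value in gases.values()):
        raise ValueError("gas concentrations cannot be negative")
    total = sum(gases.values())
    if total == 0:
        raise ValueError("at least one gas concentration must be above zero")
    return {name: 100.0 * value / total for name, value in gases.items()}


# Usage with made-up readings in ppm:
point = duval_triangle_coordinates(ch4_ppm=50, c2h4_ppm=30, c2h2_ppm=20)
print(point)
```

Because only the proportions matter, the same point results whether the readings are 50/30/20 ppm or 500/300/200 ppm, which is why this kind of analysis is usually paired with absolute set points.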

Another step in the right direction is an industry-wide push for standardization. The ability to communicate in a standard format (DNP3.0, IEC 61850, Modbus, etc.) is of paramount importance, and the good news is that most DGA instrument providers are already there or are quickly moving in this direction.

Price

While the above-mentioned technical features are compelling, the biggest pain point may still be initial cost. Online DGA monitors can cost anywhere from $10,000 to $60,000 and require a detailed business case. Many utilities can’t afford to sacrifice functionality. Ironically, they can’t afford the prices of feature-rich systems for their transformer fleets either. Spending $50,000 on an instrument for one transformer, after all, is difficult to justify even when protecting a multimillion-dollar asset.

Simply put, costs must come down. Instrumentation installations are often evaluated as a percent of costs of the transformer or LTC. If instrumentation prices come down, instruments are a lower percentage of costs and thus can be deployed on smaller assets and become more widespread.

Another important factor to consider beyond the price paid for instrumentation is the total cost of ownership (TCO). TCO adds the initial installation costs and the maintenance costs throughout the life of the asset to the purchase price. When TCO is considered, utilities take into account the full cost of owning the monitor throughout the life of the transformer and can make a better-educated decision.
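The arithmetic behind this comparison can be sketched in a few lines. The example assumes TCO is taken as purchase price plus installation plus a flat annual maintenance cost, with no discounting or inflation. Only the $50,000 instrument price comes from the figures above; the installation cost, maintenance cost, service life and transformer value are hypothetical, as are the function names.

```python
def total_cost_of_ownership(purchase, installation, annual_maintenance, service_years):
    """Simple undiscounted TCO: up-front costs plus maintenance over the service life."""
    return purchase + installation + annual_maintenance * service_years


def share_of_asset_cost(monitor_cost, asset_cost):
    """Monitor cost expressed as a percentage of the monitored asset's cost."""
    return 100.0 * monitor_cost / asset_cost


# $50,000 is the instrument price cited in the text; every other figure is hypothetical.
tco = total_cost_of_ownership(
    purchase=50_000,
    installation=5_000,        # hypothetical
    annual_maintenance=1_000,  # hypothetical
    service_years=20,          # hypothetical
)
asset_cost = 2_000_000         # hypothetical transformer value

print(f"TCO: ${tco:,}")
print(f"Purchase price alone: {share_of_asset_cost(50_000, asset_cost):.2f}% of asset cost")
print(f"Full TCO: {share_of_asset_cost(tco, asset_cost):.2f}% of asset cost")
```

Comparing the purchase-only percentage with the TCO percentage shows how much of the real cost a price-only evaluation leaves out.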

Finding Our Smarts

Of course, DGA monitoring is only one solution for improving grid infrastructure health, but it’s a highly important one with a significant impact.

The smart grid in general is promising because it allows technology to adapt to market conditions and consumer habits. In much the same way, infrastructure technology also needs to help utilities and substation operators analyze and adapt to the way assets are functioning. Reductions in maintenance, complexity and cost can help pave a path to actionable information.

While smart metering may currently be the most visible aspect of the smart grid, smart monitoring of the entire infrastructure is required to produce a grid that meets the expectations of stakeholders at large. The meter is only one aspect of the overall infrastructure picture. Making the grid truly smart will require a holistic approach that begins with a focus on meters, but also incorporates a concurrent concentration on transmission and distribution through to generation.

About the Authors

Zbigniew Banach is the Transformer Monitoring Equipment Specialist for ComEd. He determines equipment monitoring needs for substation equipment; develops and maintains guidelines for equipment monitoring; and performs economic analysis of monitoring equipment.


Prior to his current position, he was the Superintendent of Technical and Maintenance training programs for Exelon Nuclear. His responsibilities included Accreditation of Nuclear Training, technical qualification for Exelon’s Nuclear power plant employees, and management of training programs. His technical background includes plant instrumentation systems, nuclear reactor protection & control systems, and substation transformer monitoring. He has over 35 years of experience in the utility industry.

Zbigniew earned a B.S. degree in Electrical Engineering Technology from Purdue University and completed training in the Kellogg Executive Programs at Northwestern University.

Brett Sargent is Vice President/General Manager, Products at LumaSense Technologies, Inc. He has over 20 years of experience working in various leadership roles in engineering, supply chain, manufacturing, quality, marketing and global sales with DuPont, Lockheed Martin, Exelon Nuclear, and General Electric, where he was Global Sales Leader for GE Energy T&D Products division. Previously, Brett was Vice President of Global Sales for LumaSense for five years. Brett holds a BS in Electrical Engineering from Widener University, MS in Nuclear Engineering from Rensselaer Polytechnic Institute, and MBA from Georgia State University.
