Overview

There are a number of choices to consider when connecting sensors in the field to a network. Different networking technologies offer varying levels of speed, throughput, range, resiliency, privacy and security. Additionally, the utility will need to consider how to pay for the data connection: as an upfront capital expense, as a lower upfront cost with added maintenance, or as a service. A utility needs to weigh all of these factors when selecting the network technology for its sensor network. This article examines these factors and the pros and cons of each type of network.

Given the relative novelty of Internet of Things (IoT) applications, no single solution fits all needs. Instead, network communication technologies have been borrowed from other applications, resulting in a multitude of options, each with its own pros and cons. Selecting the right communication network is therefore a trade-off between technical and economic considerations, made case by case.

Technical Considerations

Recent advances in technology and falling prices for sensing devices have catapulted sensor use in many applications. Miniaturization and low power consumption allow sensors to be deployed at large scale, creating a stream of aggregated information that, once processed, can provide a complete picture of a whole site, not just the condition of a few assets.

Communication Technology

Traditionally, sensors in a substation are connected to a Remote Terminal Unit (RTU), which consolidates all signals and sends the data back to a SCADA system. The IEC 61850 standard requires that communication between the devices be wired, so wired media (e.g., UTP, coaxial cable, fiber) have been the preferred choice for connecting sensors to an RTU. For sensors that gather critical information for substation automation, this is still the best approach, given the maturity and robustness of wired technologies. While wired media work well when only a few sensors are used, they are impractical and very costly for applications that involve connecting tens to hundreds of sensors at a site.(1)

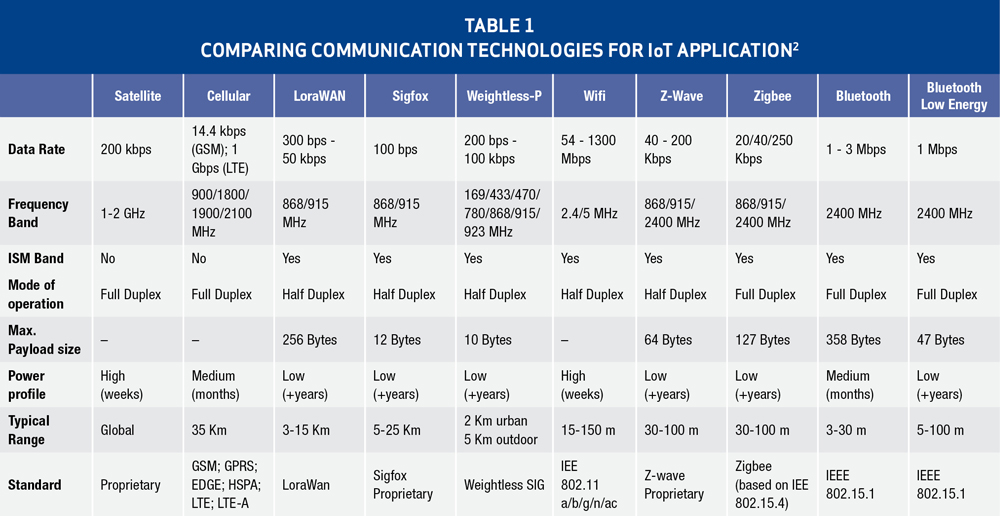

The previous arguments show that to fully unlock the potential of IoT, we must go beyond wired communication and consider the options available for wireless communication. Fortunately, this is not a black-and-white decision, as a combination of both technologies can be used. This way, the requirements of the IEC 61850 standard are met for highly critical sensors, while deploying the sensors needed to monitor the whole site remains practical and within budget. Wireless communication does add complexity to the design of an IoT system, as one must now decide which of the many available technologies is right for a specific application. Table 1 summarizes the technical characteristics of the most common technologies used for IoT wireless networks.(2)

Table 1: Comparing communication technologies for IoT applications (2)

As Table 1 shows, the technologies differ in data rate, frequency, payload size, power consumption and range, so a good understanding of the application is fundamental to selecting the proper one. For example, sensors reading physical measurements such as voltage, current, temperature and humidity do not individually generate large amounts of data (only a few bytes per measurement), and they are usually deployed in large numbers at a site, powered by batteries or solar panels.

Therefore, data rate and payload size matter less than power consumption and range when choosing a communication technology for this application. If we were to deploy sensors like these in a distribution substation, wireless technologies such as Z-Wave, IEEE 802.15.4 (Zigbee) or even Bluetooth Low Energy (BLE) would fit the requirements. However, if we were to deploy them on towers along transmission lines, technologies such as LoRaWAN or Sigfox would be more appropriate.

Another example is the use of video or thermal imaging sensors. These sensors generate much more data than those considered above (approximately 500 kbps for VGA-resolution video), and their packets are much larger (>1 KB). At the same time, the number of such sensors at a site rarely exceeds 10, and they are usually located on the perimeter of the switchyard, which makes it easier to power them from conventional sources. Data rate and payload size are therefore extremely important, while power consumption and range become secondary. In this case, Wi-Fi would be the proper choice if the sensors are deployed in a substation, while cellular would be better for installations that require long range.
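The two examples above amount to a simple decision rule. The Python sketch below encodes only those examples; the `SensorProfile` type, the `candidate_technologies` function and the 100 kbps cut-off between low-rate and streaming sensors are hypothetical choices for illustration, not values taken from Table 1. A real selection would also weigh cost, coverage and security.

```python
from dataclasses import dataclass

# Hypothetical cut-off separating low-rate sensors (a few bytes per reading)
# from streaming sensors such as video; an assumption, not a Table 1 value.
HIGH_RATE_KBPS = 100.0


@dataclass
class SensorProfile:
    name: str
    data_rate_kbps: float  # average data rate generated by one sensor
    long_range: bool       # True for sites such as towers along transmission lines


def candidate_technologies(profile: SensorProfile) -> list[str]:
    """Suggest wireless technologies following the article's two examples."""
    if profile.data_rate_kbps >= HIGH_RATE_KBPS:
        # Data rate and payload size dominate; power and range are secondary.
        return ["cellular"] if profile.long_range else ["Wi-Fi"]
    # Low-rate, battery-powered sensors: power consumption and range dominate.
    if profile.long_range:
        return ["LoRaWAN", "Sigfox"]
    return ["Z-Wave", "Zigbee (IEEE 802.15.4)", "BLE"]


if __name__ == "__main__":
    camera = SensorProfile("VGA video", data_rate_kbps=500.0, long_range=False)
    tower_sensor = SensorProfile("tower temperature", data_rate_kbps=0.01, long_range=True)
    print(candidate_technologies(camera))        # ['Wi-Fi']
    print(candidate_technologies(tower_sensor))  # ['LoRaWAN', 'Sigfox']
```

Keeping the rule as data-driven checks on a profile makes it easy to add a mixed-technology site later: each sensor group is classified on its own.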

No rule limits the number of different technologies that can be used at a site. In fact, many applications require a combination of technologies (e.g., PLC (wired), Zigbee and Wi-Fi), because the requirements of the deployed sensors often diverge. However, system complexity increases considerably when technologies are mixed, because new devices are needed to consolidate the signals from sensors using different technologies. In that case, a trade-off between complexity and cost must be made.

Data Storage and Processing

The potential of IoT lies in the data it gathers, so the capacity to analyze that data and/or store it for future use is important. Storage and processing can be done at the edge, on a local server, or with cloud-based services. Again, the right choice depends on the goal the system must achieve.

The aggregation of all the data captured by sensors, often called Big Data, is used to find patterns that can predict behaviour. These predictions give operations and substation managers valuable insights that lead to lower maintenance costs and better asset performance. However, finding those patterns in the aggregated data with machine learning algorithms typically requires powerful processing units that are costly for an electric utility to acquire and maintain.

The level and complexity of the computations, and hence the processing power needed, increase if artificial intelligence tools are employed to optimize the use of resources and assets based on the patterns obtained from the data. If the goal of a utility is to implement a system that uses machine learning and/or artificial intelligence tools, then a cloud-based service that provides storage and processing power would be the best option. Cloud-based systems offer a path to easier, faster and sustainable scalability, because a utility can increase storage capacity and processing power as needed without adding hardware. They also allow existing systems to be upgraded without replacing hardware, shutting down the system or disturbing operations. Another key benefit is that cloud platforms already provide access to powerful machine learning and artificial intelligence tools, making the development and implementation of the system much easier.

Not all applications of sensor data involve pattern recognition and behaviour modeling. Useful insights can also be obtained from basic trend analysis on local servers. Consider, for example, sensors measuring the temperature of a transformer's bushings. They should all read a similar temperature, because the bushings carry the same load. If the readings of one sensor start to diverge from the others, an operator can conclude that something might be wrong with that bushing, and a basic regression on that sensor's historical temperature values, adjusted for differences in load and ambient temperature, could help forecast when the issue will become critical.
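A minimal sketch of the bushing example follows. Instead of an explicit load and ambient correction, it compares each bushing with the median of its peers, which cancels the load and ambient temperature they share; this is a simplification chosen for illustration. It then fits an ordinary least-squares line to the flagged bushing's deviation and extrapolates to a critical level. The function names, readings and the 10 °C critical deviation are all hypothetical.

```python
from statistics import median
from typing import Optional


def peer_deviation(readings: dict[str, float]) -> dict[str, float]:
    """Deviation (deg C) of each bushing from the median of the other bushings.

    Comparing against peers cancels the load and ambient temperature shared by
    all bushings of the same transformer.
    """
    deviations = {}
    for name, temp in readings.items():
        others = [t for other, t in readings.items() if other != name]
        deviations[name] = temp - median(others)
    return deviations


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until_critical(days: list[float], deviations: list[float],
                        critical_deviation: float) -> Optional[float]:
    """Extrapolate the deviation trend to the day it reaches the critical level.

    Returns None when the trend is flat or falling.
    """
    slope, intercept = fit_line(days, deviations)
    if slope <= 0:
        return None
    return (critical_deviation - intercept) / slope


if __name__ == "__main__":
    # Hypothetical snapshot of three bushing temperatures (deg C).
    snapshot = {"H1": 61.0, "H2": 60.5, "H3": 66.0}
    print(peer_deviation(snapshot))  # H3 stands out at +5.25
    # Hypothetical weekly deviations of H3 from its peers (deg C).
    days = [0.0, 7.0, 14.0, 21.0]
    h3_dev = [1.0, 2.0, 3.0, 4.0]
    print(round(days_until_critical(days, h3_dev, critical_deviation=10.0), 1))  # 63.0
```

A straight-line fit is the simplest possible trend model; it suits the "basic regression" described above and needs only a local server, not a machine learning platform.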

When local servers are used, keep in mind that although one sensor generates little data, the aggregated data from many sensors can produce a large amount of traffic, affecting the performance of the network between the sensors and the server. The network should therefore be designed or upgraded to support the new traffic. For the same reason, the servers must have enough throughput to accept the incoming traffic without losing information, and enough processing power to handle it.
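A back-of-the-envelope estimate makes the aggregation effect concrete. The sketch below assumes a hypothetical site with 500 low-rate sensors sending one 16-byte reading per second, an assumed 48 bytes of protocol overhead per message, plus ten cameras at the roughly 500 kbps per VGA stream given in the video example; the helper names are illustrative.

```python
SECONDS_PER_DAY = 86_400


def group_kbps(count: int, payload_bytes: int, overhead_bytes: int,
               messages_per_second: float) -> float:
    """Average upstream load (kbit/s) of `count` identical sensors."""
    return count * (payload_bytes + overhead_bytes) * 8 * messages_per_second / 1000


def daily_gigabytes(kbps: float) -> float:
    """Data volume per day (GB) for a constant average load in kbit/s."""
    return kbps * SECONDS_PER_DAY / 8 / 1_000_000


if __name__ == "__main__":
    # Hypothetical site: 500 low-rate sensors, one 16-byte reading per second,
    # with an assumed 48 bytes of protocol overhead per message.
    low_rate = group_kbps(500, payload_bytes=16, overhead_bytes=48, messages_per_second=1.0)
    # Ten VGA cameras at roughly 500 kbps each.
    video = 10 * 500.0
    total = low_rate + video
    print(total)                             # 5256.0
    print(round(daily_gigabytes(total), 1))  # 56.8
```

Even though each low-rate sensor sends only a few bytes, the site as a whole pushes several megabits per second and tens of gigabytes per day toward the server, which is the load the network and server must be sized for.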

Where the network cannot be adjusted to the new traffic, data storage and analysis can instead be moved to a device at the remote site (e.g., the substation), which is considered the edge. The main advantages of this configuration relate to the network: no large stream of traffic crosses the utility network and degrades its performance, and the impact of network problems on the IoT system is minimized or even eliminated. The main disadvantage is the higher system cost due to the local processing devices.
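One way an edge device can keep traffic off the utility network is to store raw readings locally and forward only periodic summaries. The article does not specify what is sent upstream, so the sketch below is an assumption: `WindowSummary` and `summarize` are hypothetical names, and min/mean/max per window is just one reasonable choice of summary.

```python
from dataclasses import dataclass
from statistics import fmean


@dataclass
class WindowSummary:
    sensor_id: str
    count: int
    minimum: float
    mean: float
    maximum: float


def summarize(sensor_id: str, window: list[float]) -> WindowSummary:
    """Collapse one window of raw readings into a single upstream record."""
    if not window:
        raise ValueError("window must contain at least one reading")
    return WindowSummary(sensor_id, len(window), min(window), fmean(window), max(window))


if __name__ == "__main__":
    # Hypothetical hour of one-per-second readings kept locally at the edge;
    # only the summary record crosses the utility network.
    raw_hour = [60.0 + (i % 3) for i in range(3600)]
    print(summarize("bushing-H1", raw_hour))  # one record instead of 3,600
```

The raw data stays available on the edge device for local analysis, while the upstream load drops by roughly the number of readings per window.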

An important consideration when implementing an IoT system with storage and processing at the edge is that the devices used at the site must be hardened enough to function reliably under the site's environmental and electrical conditions. Otherwise, the system's output may turn out to be unreliable and therefore useless, and the cost of maintaining or replacing failing devices can become a long-term burden on the utility's budget. For substation applications, the recommendation is that any edge processing and storage device should meet the IEC 61850-3 and IEEE 1613 standards, support a wide temperature range (-40 °C to +85 °C), and have no moving parts.

Power Source

New technology provides a compelling reason to power sensors by battery. Sensors can be programmed to sleep most of the time and wake only to take a reading and transmit the data upstream. Furthermore, since wireless transmission consumes the most power, readings can be taken periodically, stored in the sensor and transmitted upstream less frequently (e.g., a sensor may record temperature hourly and transmit only once per day). The frequency of readings and transmissions and the size of the data all directly affect battery life. Current battery technology is more compact and able to hold a charge for long periods. A sensor can last up to 10 years on its own battery if it is programmed optimally. A hybrid approach is also possible, where the battery is recharged by a small solar panel or by energy harvested from an existing power line using a current transformer. When comparing battery power with wired power, the utility will also have to weigh the initial cost of installing power supplies and cables against the cost of replacing sensors or batteries at the end of their life.
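The effect of batching transmissions can be estimated with a daily charge budget: sum the charge drawn while measuring, transmitting and sleeping, then divide the battery capacity by it. The sketch below uses hypothetical component figures (a 2400 mAh cell, 10 µA sleep current, 1 mA for 1 s per reading, 30 mA for 3 s per transmission); `battery_life_years` is an illustrative helper, not a vendor tool.

```python
SECONDS_PER_DAY = 86_400


def battery_life_years(capacity_mah: float, sleep_ma: float,
                       measure_ma: float, measure_s: float, readings_per_day: int,
                       tx_ma: float, tx_s: float, transmissions_per_day: int) -> float:
    """Estimate battery life from a daily duty-cycle charge budget.

    Ignores self-discharge, temperature effects and battery ageing, so the
    result is an upper bound rather than a prediction.
    """
    active_s = readings_per_day * measure_s + transmissions_per_day * tx_s
    charge_mas = (readings_per_day * measure_ma * measure_s      # taking readings
                  + transmissions_per_day * tx_ma * tx_s         # radio transmissions
                  + sleep_ma * (SECONDS_PER_DAY - active_s))     # sleeping
    mah_per_day = charge_mas / 3600  # mA*s -> mAh
    return capacity_mah / mah_per_day / 365


if __name__ == "__main__":
    # Hypothetical sensor taking one reading per hour.
    common = dict(capacity_mah=2400, sleep_ma=0.010, measure_ma=1.0,
                  measure_s=1.0, readings_per_day=24, tx_ma=30.0, tx_s=3.0)
    daily = battery_life_years(**common, transmissions_per_day=1)
    hourly = battery_life_years(**common, transmissions_per_day=24)
    print(f"store and send daily: {daily:.1f} years")   # 24.2
    print(f"send every reading:   {hourly:.1f} years")  # 7.8
```

The absolute figures are optimistic because self-discharge is ignored; the useful result is the roughly threefold difference that comes purely from storing readings and transmitting once per day.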

Economic Considerations

As with any business decision, implementing an IoT system depends on an attractive return on investment. The choice of components and technology must therefore take into account not only the financial cost but also the resources the system will require from the company to operate in the long term.

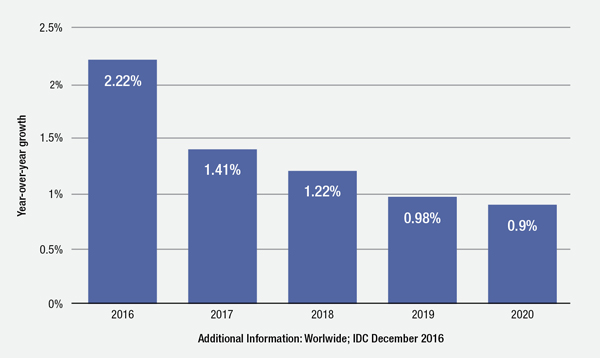

Figure 1. Forecast growth of worldwide telecom services spending from 2014 to 2020 (IDC, 2016)

Public vs. Private Networks

The network used to transmit data from the sensors or gateways to a centralized server for storage or analysis can be owned or outsourced. This applies mostly to the link to a remote site, since the local area network (LAN) at a site is usually owned by the utility.

Until a few years ago, many industries, including utilities, relied on their own resources to ensure proper communication to their remote sites. Some of them created their own fiber optic and radio frequency networks, which later turned into new business opportunities.(3,4,5) However, from the forecast growth in worldwide telecommunications services spending from 2014 to 2020 (Figure 1),(6) we can deduce that the telecommunications industry is already mature and its market is reaching a plateau.

Telecommunications companies (telecoms) have already made enormous capital investments to build their infrastructure, and their core business is operating and maintaining it. Today, almost every telecom offers products for customers that need highly reliable and secure services, such as electrical utilities. Therefore, unless an electrical utility already runs a telecom business leveraging its existing network, or there is no telecommunications service in an area, there is no strategic reason for a utility to deploy a new telecommunications network on its own. The capital investment and operating expenses would be high, and the operations would not be as efficient as those of a telecom company.
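The build-versus-buy comparison can be framed as the present value of each option's costs over the same horizon. The sketch below is a generic discounted-cost calculation; every figure in it (upfront cost, annual costs, 10-year horizon, 7 percent discount rate) is hypothetical and only shows how the comparison is structured.

```python
def present_value_cost(upfront: float, annual: float, years: int,
                       discount_rate: float) -> float:
    """Present value of an upfront cost plus a constant cost paid at the end of each year."""
    return upfront + sum(annual / (1 + discount_rate) ** year for year in range(1, years + 1))


if __name__ == "__main__":
    # Hypothetical figures for illustration only; real values come from quotes.
    years, rate = 10, 0.07
    private = present_value_cost(upfront=2_000_000, annual=150_000,
                                 years=years, discount_rate=rate)
    service = present_value_cost(upfront=0, annual=300_000,
                                 years=years, discount_rate=rate)
    print(f"private network: {private:,.0f}")
    print(f"telecom service: {service:,.0f}")
```

Discounting matters here: a service fee spread over many years weighs less in present-value terms than the same total spent upfront, which favours the outsourced option when the annual costs are comparable.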

Cloud Storage and Processing

The choice between public and private networks can be driven by a utility's choice between cloud computing and private server-based computing. Utilities have lagged other industries in adopting cloud computing for at least two reasons:

- Privacy and security – utilities have held the view that cloud computing is not as secure as hosting applications on their own servers. The concern seems legitimate when the data used to manage critical assets is hosted on platforms that the utility does not fully manage. A similar concern applies to hosting customer billing information in the cloud. Utilities are beginning to accept that advancements in technology can make it more cost-effective to rely on security measures implemented in the cloud than to implement those measures on their own.

- Capital expenditure – investor-owned utilities have preferred owning their IT assets for the fixed rate of return those assets provide.(7) To encourage utilities to adopt cloud-based computing, regulators are considering ways to let utilities pay for the service as a capital expense.(8)

As with telecoms, cloud service companies are better positioned to invest in new technologies and security because of their economies of scale. This makes it easier for utilities to adopt the latest technologies seamlessly and to scale up services when required. The change is already happening at a fast pace: the OpenFog Consortium estimates that, based on current data, the compound annual growth of the market for cloud computing use by electrical utilities will be close to 120 percent between 2018 and 2022.(9)
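To put the 120 percent figure in perspective, assume it is a compound annual growth rate applied over the four years from 2018 to 2022 (an interpretation; the estimate states only the rate and the range). The market would then grow by a factor of

$$
(1 + 1.20)^{4} = 2.2^{4} \approx 23.4
$$

that is, to roughly 23 times its 2018 size by 2022.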

Conclusion

Utilities can benefit enormously from new technologies such as IoT and cloud-based platforms. Several technologies are currently available that provide the reliability and security required for critical infrastructure applications. However, implementing them can be very difficult for many electrical utilities because it requires skills and resources outside their core business. To overcome this problem, utilities could use the services of companies that specialize in telecommunications and cloud platforms. This would limit the control a utility has over such assets, but it would help maximize the benefits while lowering costs.

Richard Harada has more than 20 years of experience in industrial networking communications and applications. Prior to joining Systems with Intelligence, Harada worked at RuggedCom and Siemens Canada, where he focused on product management and business development for industrial communications in the electric power market. Harada is an electronic engineering technologist and has a Bachelor of Science degree in computer science from York University in Toronto.

Edgar Sotter has a doctorate in electronic engineering from Universitat Rovira i Virgili in Spain and a Bachelor of Science in electrical engineering from Universidad del Norte in Colombia. Sotter's fields of expertise are sensing and monitoring systems and computer networks. Sotter previously worked at Siemens/RuggedCom and is currently the director of product strategies & client solutions at Systems With Intelligence.

References:

1. A. Mahmood, N. Javaid and S. Razzaq, "A review of wireless communications for smart grid," Renewable and Sustainable Energy Reviews, pp. 248-260, 2015.

2. F. Vannieuwenborg, S. Verbrugge and D. Colle, "Choosing IoT-connectivity? A guiding methodology based on functional characteristics and economic considerations," Transactions on Emerging Telecommunications Technologies, vol. 29, e3308, 2018.

3. The Elastic Network (ECI), "www.engerati.com," 07 June 2018. [Online]. Available: https://www.engerati.com/system/files/from_utility_to_utelco_business_models_for_profitability.pdf. [Accessed 14 08 2018].

4. D. Talbot and M. Paz-Canales, "Smart Grid Paybacks: The Chattanooga Example," 02 2017. [Online]. Available: https://dash.harvard.edu/bitstream/handle/1/30201056/2017-02-06_chatanooga.pdf?sequence=1. [Accessed 14 08 2018].

5. L. A. Reyes Jr., "Kit Carson Electric Cooperative, Inc. Fiber to the Home (FTTH) Broadband Project Update," 22 08 2016. [Online]. Available: https://www.nmlegis.gov/handouts/STTC%20082216%20Item%201%20Kit%20Carson%20Electric%20Cooperative.pdf. [Accessed 14 08 2018].

6. IDC, "Forecast growth worldwide telecom services spending from 2014 to 2020," 12 2016. [Online]. Available: https://www-statista-com.myaccess.library.utoronto.ca/statistics/323006/worldwide-telecom-services-spending-growth-forecast/. [Accessed 14 08 2018].

7. L. Whitten, "Cloud Computing 101: Need-to-know info for electric utilities," 12 2013. [Online]. Available: http://online.electricity-today.com/doc/electricity-today/et_november_december_2013_digital/2013121101/#10/. [Accessed 14 08 2018].

8. J. S. John, "Regulators to Utilities: We'll Let You Rate-Base Your Cloud Computing," 08 02 2017. [Online]. Available: https://www.greentechmedia.com/articles/read/regulators-to-utilities-well-let-you-capex-your-cloud-computing#gs.dknNoVI. [Accessed 14 08 2018].

9. OpenFog Consortium, "Size and Impact of Fog Computing Market," 10 2017. [Online]. Available: https://www.openfogconsortium.org/wp-content/uploads/451-Research-report-on-5-year-Market-Sizing-of-Fog-Oct-2017.pdf. [Accessed 14 08 2018].