A recent pilot project used stationary battery storage at commercial and industrial customer sites to measure, analyze and verify how large-capacity battery systems perform when responding to demand response signals from a utility. The monitoring relied on high-quality sensors in addition to the meter data provided by both the utility and the battery system. Energy storage systems introduce a host of benefits for the electricity grid, but these devices alter the landscape as soon as they are in place, and their behavior must be properly modeled in order to maximize their effectiveness.

In many ways, the project was a glimpse into the utility of the future, as utilities will increasingly rely upon distributed energy resources to meet customer expectations. The energy industry is anticipating a shift in operations as distributed (physically located near an end user) battery systems open new markets and more efficient operations in a world where rooftop solar generation is more common. Energy storage systems, batteries, in this case, have capabilities and applications for a variety of grid services that mostly utilize very basic economic theories: Buy and store energy when it’s most available and less expensive, then sell energy back when it’s scarce and more expensive.

The idea is that energy is more valuable at certain times, but energy storage batteries also have potentially valuable applications in the near term. Both customers and utilities are interested in lowering their peak demand (the highest amount of energy consumed within a given timeframe) because these peaks require the most generation capacity, and that marginal capacity often represents the most expensive or highest-emitting electricity generation. The reserve storage capacity of batteries placed at customer sites enables customers to reduce electricity demand and lower any demand charges on their bill. Utilities also have an interest in these customer-sited batteries because their performance affects utility operation and the stability of the grid.

Energy storage devices find their value as a local resource that can either increase or decrease system demand by surgically absorbing or providing capacity and energy. The systems tasked with maintaining the balance of electricity supply and demand must take into account the activities of a swath of energy consumers located in different areas. It’s a complicated task often run by balancing authorities like Independent System Operators (ISOs) and Regional Transmission Organizations (RTOs), which have day-ahead markets as well as real-time markets with “ancillary services” working to balance supply, demand and voltage frequency within a four-second timeframe.

The California ISO (CAISO) has set up rules to allow non-generating resources (batteries) to provide ancillary services. On the surface, energy storage (and the services it provides) can be naturally incorporated into the existing wholesale electricity markets, but its inclusion is still predicated on accurate and timely performance in response to dispatch signals. Imagine asking a battery to discharge when it is already fully discharged. In that case, this “reliability” resource wouldn’t be very reliable.

Not only must batteries perform the requested action when dispatched, they must be precise in the amount provided. If the device does not meet the dispatch instructions from the utility or balancing authority, another resource is needed to compensate in order to maintain the balance between supply and demand. A battery system can deviate from the desired performance in a variety of ways, which fall into two basic situations:

- Overperformance: Discharging/exporting more than requested (providing too many kW) or charging/importing more than requested (absorbing too many kW)

- Underperformance: Discharging less than requested (not providing enough kW) or charging less than requested (absorbing too little kW)
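The two situations above can be sketched as a simple check on a single dispatch interval. This is a minimal illustration, not the pilot project's method: the function name, the sign convention (positive kW for discharge/export, negative kW for charge/import) and the 1 kW tolerance band are all assumptions made for the example.

```python
def classify_response(requested_kw: float, delivered_kw: float,
                      tolerance_kw: float = 1.0) -> str:
    """Label a battery's response to one dispatch instruction.

    Assumed convention: positive kW = discharge/export,
    negative kW = charge/import. tolerance_kw is a hypothetical
    dead band inside which the response counts as on target.
    """
    # Measure the delivered power along the requested direction so that
    # charge and discharge requests are judged the same way.
    direction = 1.0 if requested_kw >= 0 else -1.0
    requested = abs(requested_kw)
    delivered = direction * delivered_kw  # negative if the battery went the wrong way

    deviation = delivered - requested
    if deviation > tolerance_kw:
        return "overperformance"
    if deviation < -tolerance_kw:
        return "underperformance"
    return "on target"


print(classify_response(100, 120))    # discharged more than requested
print(classify_response(-100, -80))   # charged less than requested
```

A battery that moves in the wrong direction (for example, charging when asked to discharge) is treated here as underperformance, which is one reasonable choice among several.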

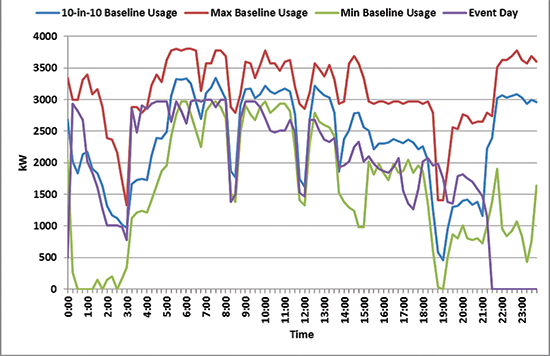

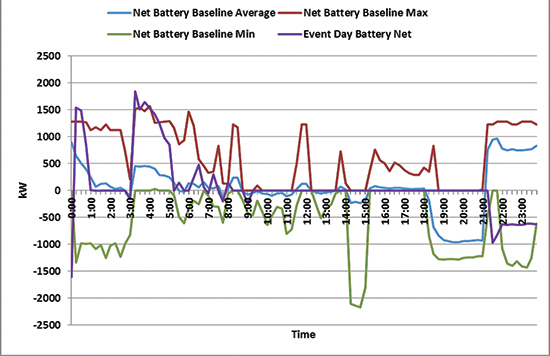

This is a fairly straightforward problem when we have sufficient metering in place – simply penalize the battery for not performing as requested. However, the problem gets immensely more complicated when the battery is placed behind the meter and the utility must attempt to filter through the noise of the other customer loads that are measured by the utility meter. Take a look at the two graphs shown from the pilot project. The first graph shows the utility meter load. The second shows the battery import and export of power.

There is some significant noise in these charts related to “baselines.” The baselines used for this project were known as 10-in-10: the average usage in each 15-minute interval over the past 10 non-holiday workdays. These charts also display the maximum usage across that 10-day baseline period (Max baseline usage) and the minimum usage in the same period. “Event day” is the day on which the DR event was called.
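As a rough sketch of how a 10-in-10 baseline could be computed from interval meter data, the example below averages each 15-minute interval across the 10 prior days and also returns the maximum and minimum envelope. The function names and data layout are assumptions for illustration, and choosing which days count as non-holiday workdays is left to the caller. The second function assumes the response is estimated as baseline minus metered event-day load.

```python
from statistics import mean


def ten_in_ten_baseline(prior_days):
    """Average each 15-minute interval across 10 prior non-holiday workdays.

    prior_days: list of 10 lists, one per day, each holding that day's
    interval readings in kW (96 readings for a full day).
    """
    if len(prior_days) != 10:
        raise ValueError("a 10-in-10 baseline needs exactly 10 prior days")
    if len({len(day) for day in prior_days}) != 1:
        raise ValueError("every day must have the same number of intervals")

    # Regroup the readings so each tuple holds one interval across all days.
    by_interval = list(zip(*prior_days))
    return {
        "baseline": [mean(v) for v in by_interval],
        "max": [max(v) for v in by_interval],
        "min": [min(v) for v in by_interval],
    }


def estimated_reduction(baseline, event_day):
    """Baseline minus metered event-day load, interval by interval (kW)."""
    return [b - e for b, e in zip(baseline, event_day)]


# Illustrative numbers only: two intervals per day instead of 96.
days = [[100 + d, 200] for d in range(10)]
result = ten_in_ten_baseline(days)
print(result["baseline"])
print(estimated_reduction(result["baseline"], [84.5, 150]))
```

Because the estimate is a difference between a historical average and a single day's meter reading, any ordinary day-to-day variation in the facility load shows up directly as apparent battery over- or underperformance.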

With such a large facility load, it’s tough to extract the battery performance from the normal load. This problem is not unique to batteries; it’s a known problem with demand response programs. How do we quantify performance with such a squishy asset with so many things going on?

The baselining technique that was employed in the pilot project is the most common. There are other variations, including those that the California Baseline Accuracy Working Group recently submitted, but each amounts to trying to predict what would have happened in the absence of a demand response event based on historical information. What if production begins late one day? What if the company deploys new, more efficient computers or electric vehicle charging stations? There is a ton of noise.

Any new technology application takes time to refine and distributed energy storage is no different. The study referenced above observed a variety of behaviors and nuances that deviated from the anticipated and desired response of the battery storage system’s demand response performance. The ability of a battery storage system to respond with precision to utility or balancing authority dispatch instructions will be refined over time. However, measuring the performance with a classic demand response baseline approach may prove inadequate for the variety of battery energy storage applications.

Batteries hold tremendous value across a variety of applications, but there are still quantification and compensation problems to solve before we start mass deployment. These baselining problems are acute for batteries placed behind customer meters, but part two of this series will focus on problems that can arise from a lack of foresight or insufficient understanding of the system needs.

About the Author

Sean Morash excels in creating simple solutions of complex electricity sector themes based on a working knowledge of grid modernization related technologies and policies. He is a consultant at EnerNex, where he works across a variety of projects, including helping clients to assess the value of next-generation demand response technologies aiming to capture multiple simultaneous value streams.
